Research Activity 5: Spatial cognition and multi-modal situation awareness

"The system of a robot companion needs an internal representation of 'the world' in order to reason, plan and interact with people. We focus on representations of the world around it, needed for navigation, and representations of objects, needed for interaction. The robot has to estimate 'where' it is (or where the object is) and 'what' it is (for example 'the kitchen' for space, or 'the bottle' for objects)."


RA5 addresses the understanding of how an embodied system can arrive at a conceptualisation of sensory-motor data and generate plans and actions to navigate and manipulate in typical home settings. Concept formation is considered in a broad sense, i.e. the ability to interpret situations: states of the environment and relationships between components of the environment, whether static or evolving over time. The robot can observe these states or be part of the evolving action itself. The robot will use different sensing modalities, and can also act in order to improve its understanding of the situation or to disambiguate its interpretation. The following questions will be studied in RA5:

Work conducted in RA5 is related to all three Key Experiments:

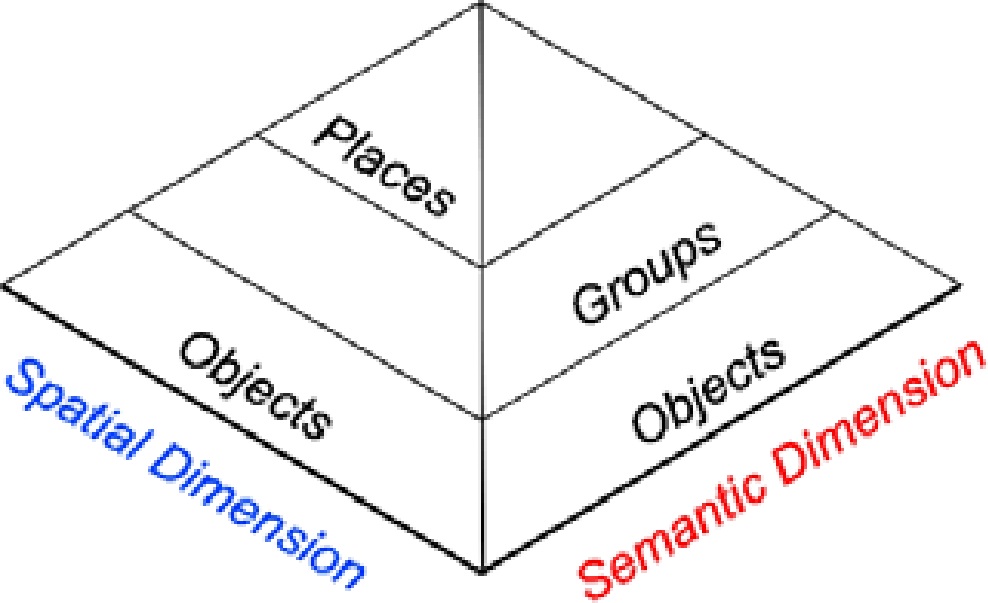

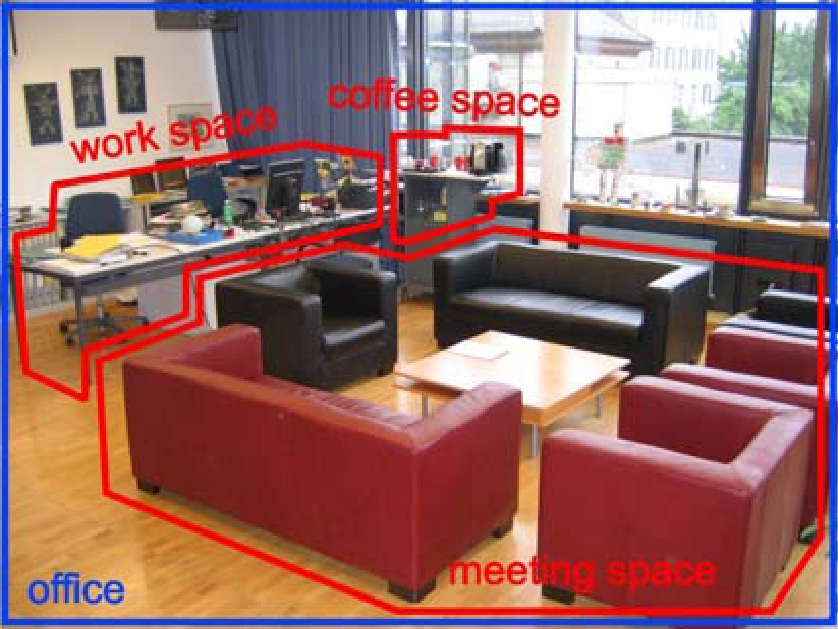

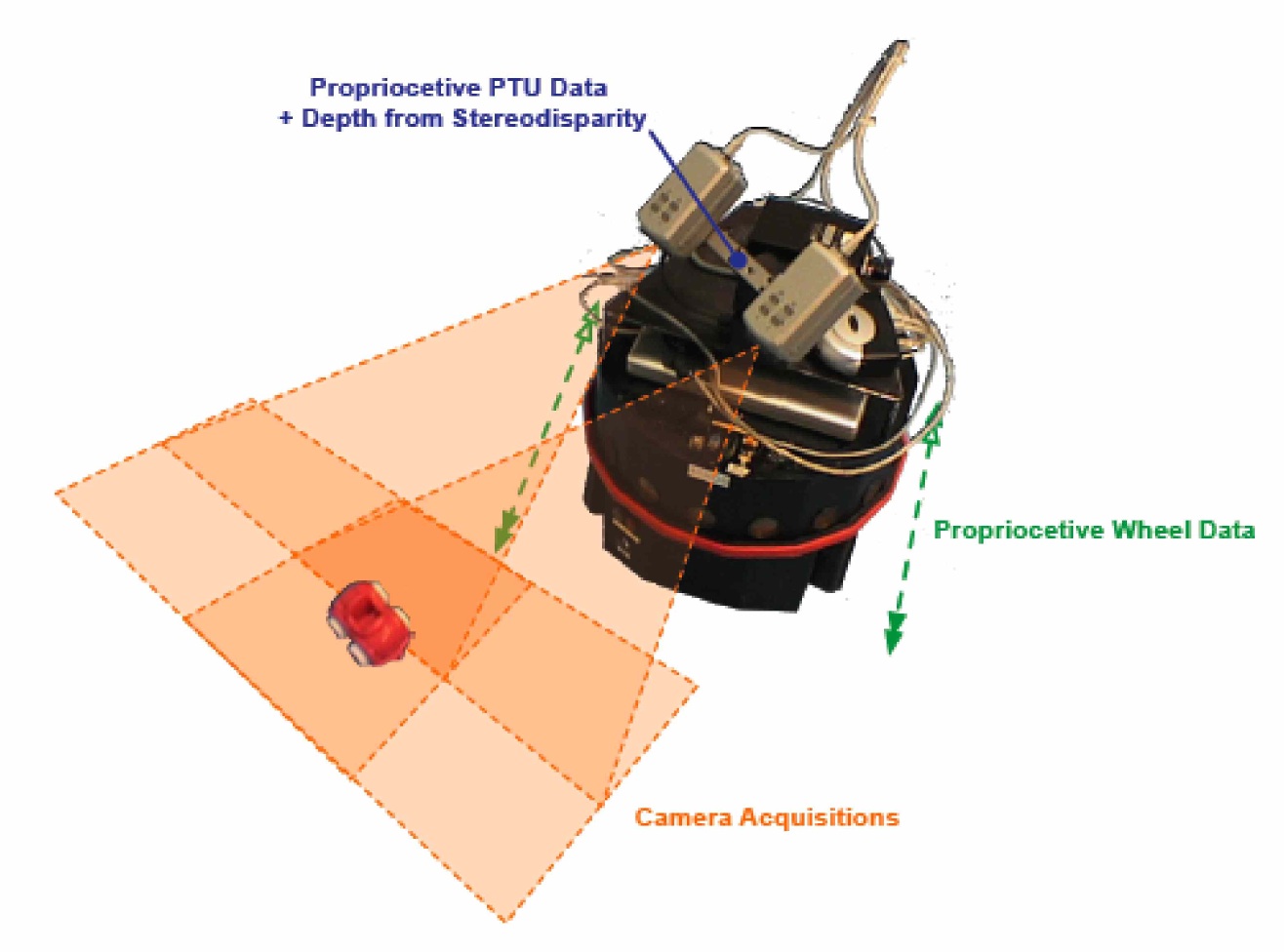

A robot uses the sensory information it perceives to identify high-level features such as objects and doors. These objects are grouped into abstractions along two dimensions: spatial and semantic. Along the semantic dimension, objects are clustered into groups that capture the semantics of the space. Along the spatial dimension, places are formed as collections of groups of objects. Example scenario: the figure depicts a typical office setting. The proposed approach would enable a robot to recognize various objects, cluster them into meaningful semantic entities such as a meeting-space and a work-space, and infer that the place is an office because it contains both a place to work and a place to conduct meetings.

The research activity RA5 addresses the understanding of how an embodied system can arrive at a conceptualisation of sensory and sensory-motor data and generate plans and actions to navigate and manipulate in typical home settings. The research activity consists of two workpackages. WP5.1 (Models of space) focuses on methods for building a representation of the robot's environment. WP5.2 (Models of objects) focuses on learning object categories through manipulating objects and being shown them. In the first three years of the project, novel methods were developed for both environment modelling and object modelling, and tested in laboratory settings. In year 4 of the project, those methods were tested extensively and integrated in the Key Experiments.

WP5.1 Models of space

'Models of space' focuses on methods for building a representation of the robot's environment that can be used in the home tour scenario. This means that, in a dialogue with a user, the robot has to build a representation of the home that corresponds to the user's conceptual representation. Work in this workpackage is carried out by four project partners.
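The object, group and place hierarchy in the office example above can be illustrated with a small sketch. It is a hypothetical illustration, not the project's method: objects are clustered along the spatial dimension by single-linkage on their 2-D positions (the threshold `max_gap` is an assumed value), and the rule tables `GROUP_RULES` and `PLACE_RULES` are invented for this example to stand in for the semantic dimension.

```python
from math import dist

# Hypothetical rule tables for illustration only: a label applies when all
# of its required members are present.
GROUP_RULES = [
    ("meeting-space", {"table", "chair"}),
    ("work-space", {"desk", "monitor"}),
]
PLACE_RULES = [
    ("office", {"meeting-space", "work-space"}),
]


def cluster_objects(detections, max_gap=1.5):
    """Spatial dimension: single-linkage clustering of (name, x, y) detections.

    Two objects end up in the same group if they are connected by a chain of
    objects whose pairwise distances are at most max_gap (metres, assumed).
    """
    unassigned = list(detections)
    groups = []
    while unassigned:
        group = [unassigned.pop()]
        grew = True
        while grew:
            grew = False
            for det in list(unassigned):
                if any(dist(det[1:], member[1:]) <= max_gap for member in group):
                    group.append(det)
                    unassigned.remove(det)
                    grew = True
        groups.append(group)
    return groups


def label_group(group):
    """Semantic dimension: name a group from the object types it contains."""
    names = {name for name, _, _ in group}
    for label, required in GROUP_RULES:
        if required <= names:
            return label
    return "unknown"


def label_place(groups):
    """Name a place from the semantic labels of its groups."""
    group_labels = {label_group(g) for g in groups}
    for label, required in PLACE_RULES:
        if required <= group_labels:
            return label
    return "unknown"


detections = [
    ("table", 0.0, 0.0), ("chair", 0.8, 0.0), ("chair", -0.8, 0.0),
    ("desk", 5.0, 5.0), ("monitor", 5.3, 5.1),
]
groups = cluster_objects(detections)
print([label_group(g) for g in groups], label_place(groups))
# prints: ['work-space', 'meeting-space'] office
```

Hand-written rules keep the example short; a learned or probabilistic mapping from objects to groups and places would slot into `label_group` and `label_place` without changing the clustering step.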
The work in WP5.1 concerns both novel computational models for space representations and user studies to inform the modelling. We studied how users who guide a robot through their environment explain complicated domestic layouts (narrow passages vs. open spaces) to the robot. We also describe a study on the integration of appearance-based clustered representations with human feedback on the Biron platform in Bielefeld. To close the perception-action cycle, we also studied how the appearance representations can be used for navigation, and how robust the approach is. A final probabilistic model of object-based representations was formalized and tested with real data. The step from sensory data to objects was studied by building an attentive system that combines local salient features into objects. Finally, we present results on representations of temporal data, in particular sequences of simple sensor readings. The deliverable ends with a summary of contributions, a list of theses and some characteristic papers.

Mobile cognitive personal assistants need a representation of space to be useful. Many representations of space in the robotics literature are hierarchical, which keeps the computational complexity within bounds. At the same time, the literature on cognitive systems suggests that humans also use a hierarchy of spatial relations. For a cognitive personal assistant it is crucial that the two representations are consistent.

The Cogniron project studies robotic applications in a cognitive context: 'cognitive', because our future robots will live in human-inhabited environments where interaction and communication require cognitive skills. In research activity 5 we focus on an internal representation of space. For cognitive robot servants that cooperate with humans in an indoor environment, such spatial representations must be suited not only for navigation but also for natural interaction with humans.
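The text does not spell out the probabilistic model of object-based representations. As a stand-in, a common textbook choice is a naive Bayes classifier that scores each room type from the set of detected objects; the sketch below uses that technique, with invented priors and likelihoods (`PRIORS`, `LIKELIHOOD`) rather than any values from the project.

```python
import math

# Hypothetical model parameters for illustration only (not project data):
# P(room) and P(object type is detected | room).
PRIORS = {"kitchen": 0.3, "office": 0.4, "living room": 0.3}
LIKELIHOOD = {
    "kitchen": {"fridge": 0.9, "bottle": 0.6, "monitor": 0.05, "sofa": 0.05},
    "office": {"fridge": 0.05, "bottle": 0.3, "monitor": 0.9, "sofa": 0.1},
    "living room": {"fridge": 0.05, "bottle": 0.3, "monitor": 0.2, "sofa": 0.9},
}


def room_posterior(observed):
    """Naive Bayes posterior P(room | detections).

    Every known object type contributes evidence: p if it was detected,
    1 - p if it was not. Objects are assumed independent given the room.
    """
    log_scores = {}
    for room, prior in PRIORS.items():
        score = math.log(prior)
        for obj, p in LIKELIHOOD[room].items():
            score += math.log(p if obj in observed else 1.0 - p)
        log_scores[room] = score
    # Normalise in log space for numerical stability.
    top = max(log_scores.values())
    z = sum(math.exp(s - top) for s in log_scores.values())
    return {room: math.exp(s - top) / z for room, s in log_scores.items()}


post = room_posterior({"fridge", "bottle"})
print(max(post, key=post.get))  # kitchen
```

Working in log space avoids underflow when many object types are modelled; the independence assumption is what makes the model "naive", and a richer model would capture co-occurrence between objects.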
In the last phase of the project we carried out research along four lines:

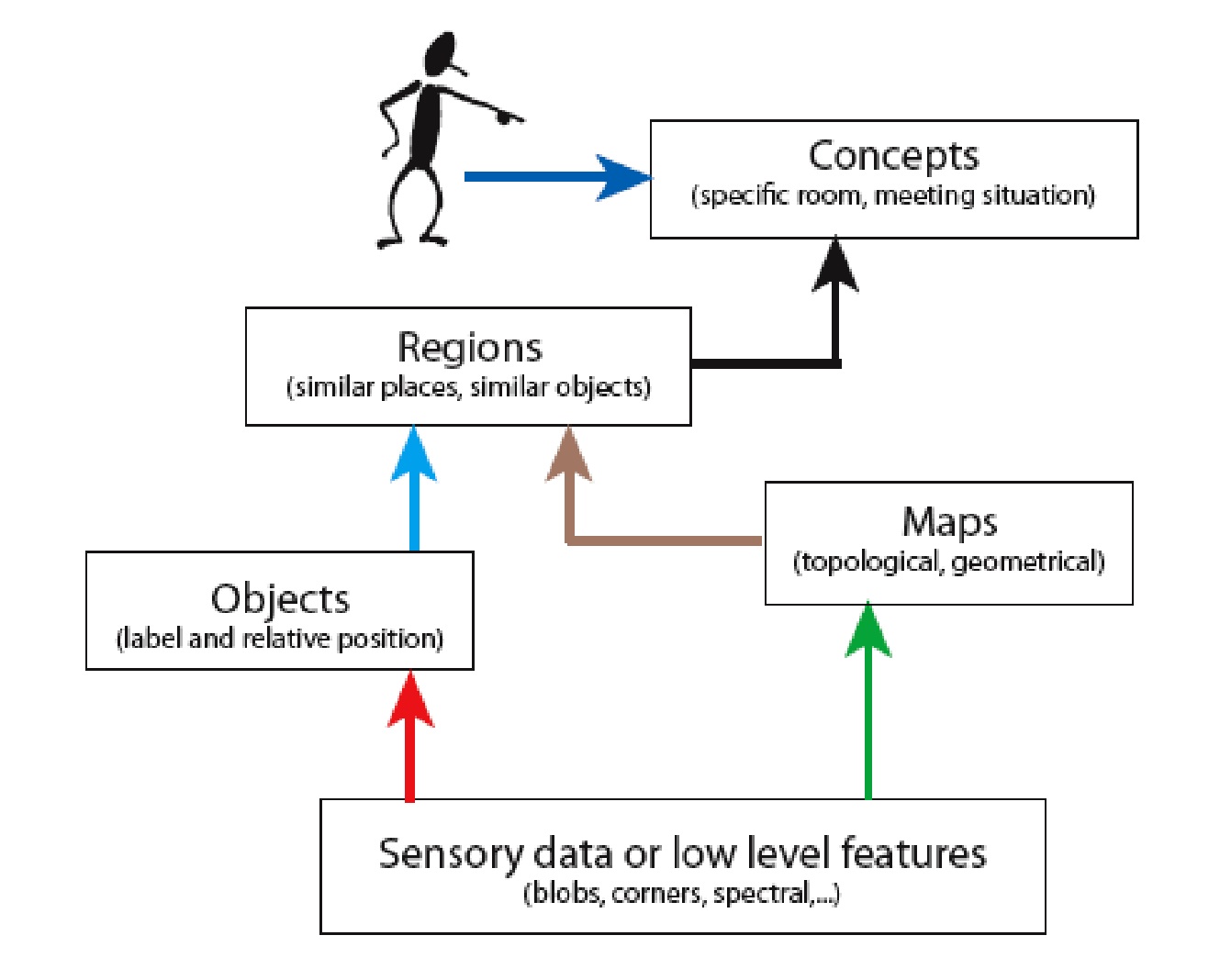

We studied different methods for building these representations; a schematic overview is given in Figure 1. As the figure shows, a simultaneous bottom-up and top-down approach was followed. The bottom-up approach starts with the raw sensor data and extracts features from it. On the basis of these features, either objects are detected or a map is made. In the project we restricted ourselves to range data and vision data, the modalities most commonly used in robot mapping. On the basis of range data, visual data or detected objects, the space is classified into spatial sub-entities. Human input is needed to give semantic input to the system (the semantics of the spatial sub-entities).

Figure 1: Schematic overview of activities in WP5.1. (Green) Geometrical and topological maps from vision and range sensors (UvA, KTH, EPFL). (Red) Object detection (LAAS, EPFL). (Brown) Space models based on detected objects (EPFL). (Blue) Space models on the basis of visual or metric features (KTH, UvA, UniBi). (Black) Human augmentation and user studies (KTH, EPFL, UvA, UniBi).

In RA5.1 we carried out research on spatial representations for robots that interact with humans. Over the project period we contributed to the state of the art by developing novel algorithms and by carrying out large experiments with humans; this combination is novel in the field. Specifically, our contributions are:

The contributions from the implementations and experiments in the project are:

A still unsolved issue is to make the robotic system aware of conceptual/spatial information that, in a guided tour scenario, is given implicitly and understood among people depending on the context and situation. For example, specifying a room by pointing vaguely towards its door and showing a room while standing inside it are often accompanied by exactly the same phrase, "and this is the room". People easily resolve the ambiguity using their common understanding of the (spatial) situation and the information given before. A related aspect is awareness of the interaction status, which is currently not used as an information cue by the mapping subsystem.

WP5.2 Models of objects

The cognitive robot companion is designed to act autonomously in a human-centred world. Therefore, acquiring knowledge about the surrounding environment is one of its key competences. To handle concepts and knowledge of the operating environment, models at different layers of abstraction are built. Besides learning models of space (see D5.2007.1), acquiring object data is a very important aspect. In phase 4 of the Cogniron project, the work in this workpackage focused on the question of how different levels of object knowledge can be learned in the presence and absence of a human supervisor. We worked on the following tasks presented in the workplan:

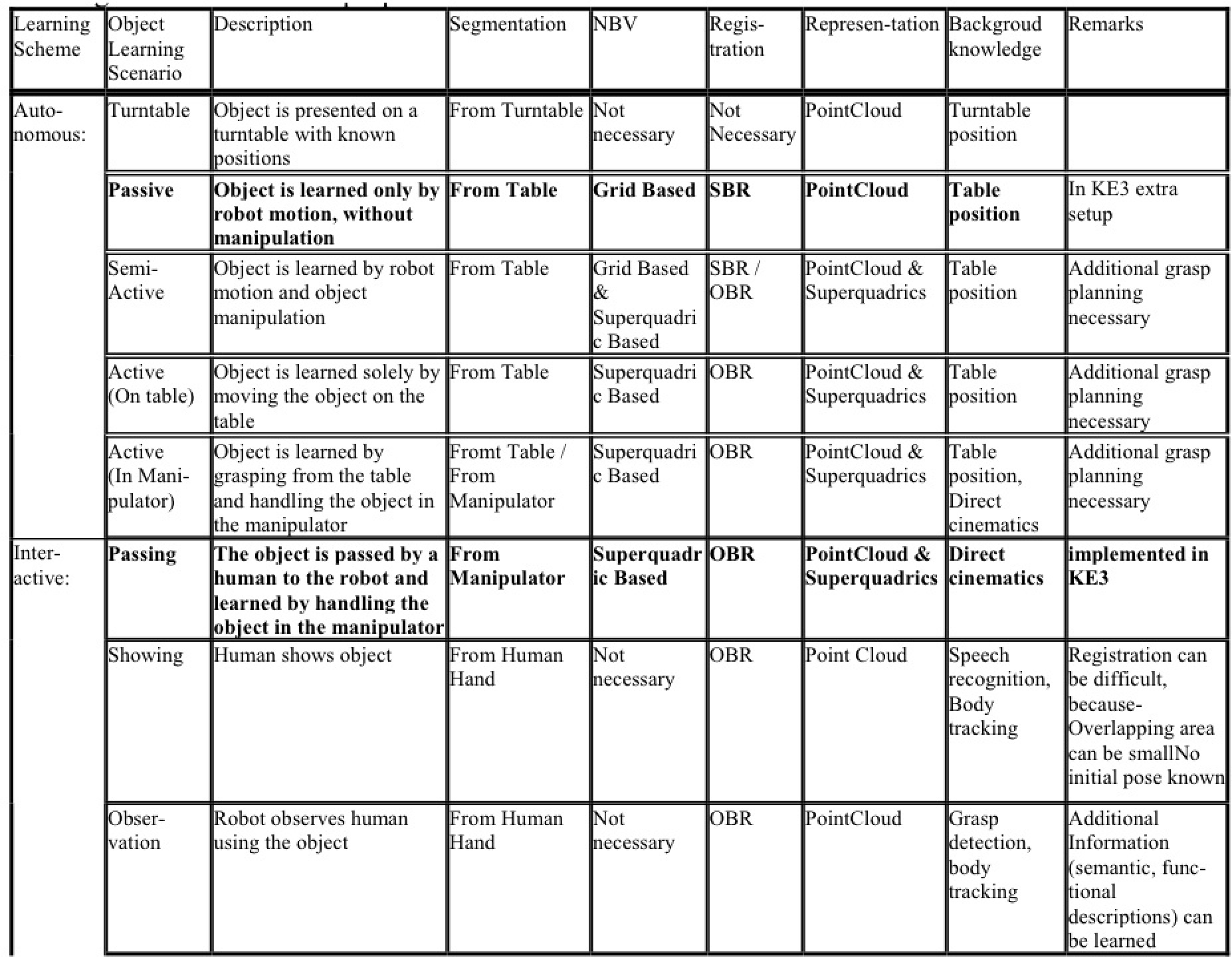

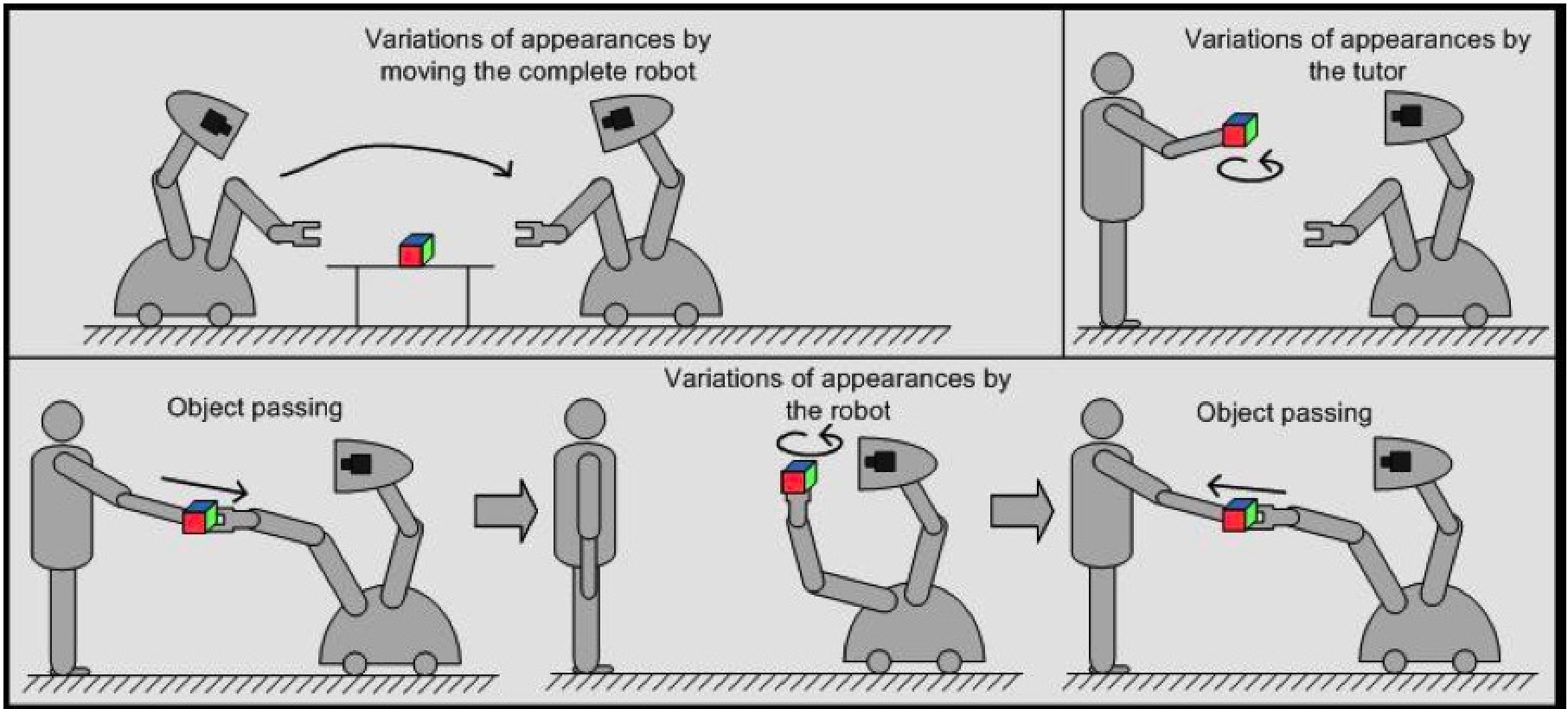

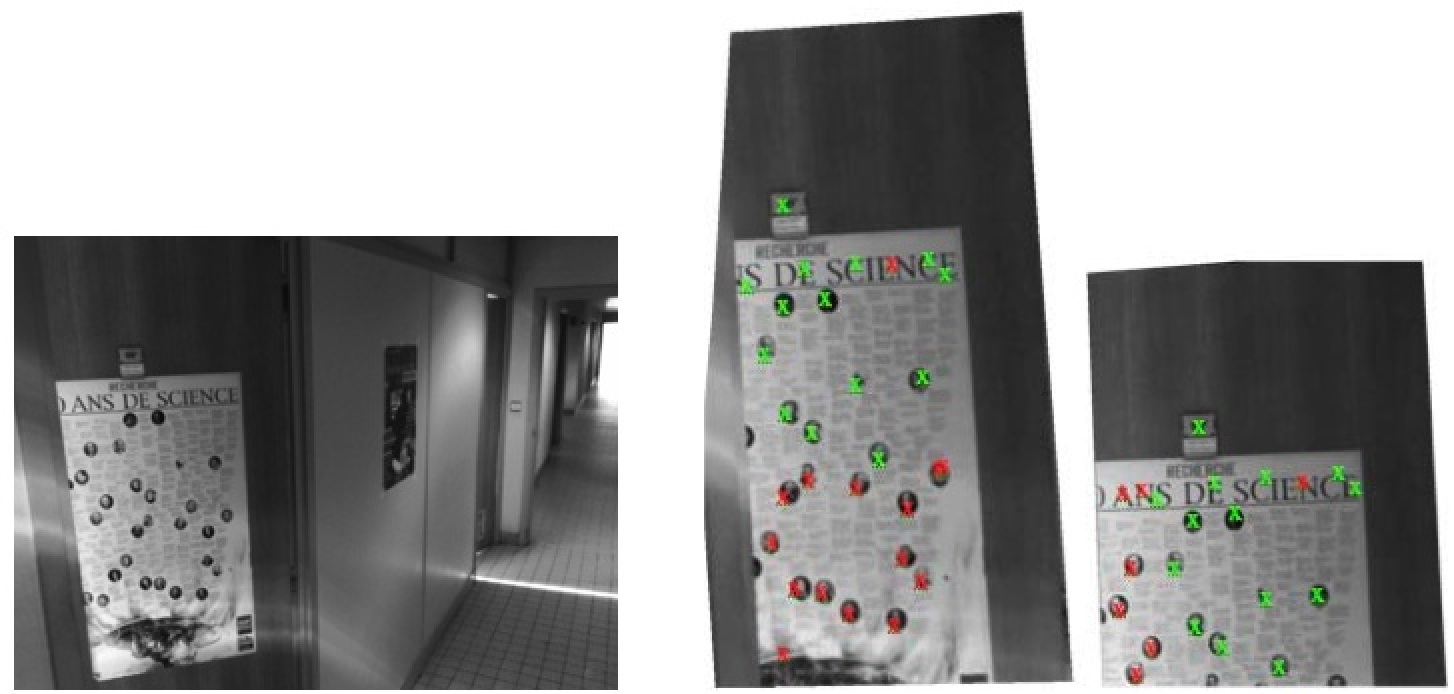

This structure reflects the different learning aspects of the unified object model. WP5.2.1 deals with the categorization of different object learning scenarios and the corresponding properties and tasks. In WP5.2.2, the appearance-based approach for object detection and the geometry-based approach for pose estimation and grasping are considered in combination. WP5.2.3 deals with appearance-based learning algorithms that can feed the appearance layer and the physical interaction layer of the unified object model in an open-ended fashion.

Figure: Several object learning scenarios (SBR: scene-based registration; OBR: object-based registration).

WP5.2.1 Autonomous and interactive learning schemes

In this workpackage, basic learning schemes for autonomous and interactive learning have been studied. Theoretical models for efficiently learning the different object layers of the unified object model at different stages of complexity have been developed. In addition, methods and algorithms have been explored for settings with and without a human supervisor.

Figure: Visualization of different learning schemes.

Figure: Range segmentation of an object applying the first and the second learning scheme (left), and a collection of all ten objects saved to the databases.

WP5.2.2 Fusion of appearance-based methods and geometrical models

Appearance-based methods and geometrical models were studied separately in the early project phases. Appearance-based methods were mainly used to detect and classify objects, whereas geometrical models were used to estimate pose and geometry (e.g. for grasping). In project phases 3 and 4, the two approaches were studied in a unified manner to combine the benefits of both.

Figure: Planar feature matching from appearance knowledge.

WP5.2.3 Open-ended learning algorithms for object models

The main goal of this work package is to enable the cognitive robot companion to learn objects and their properties in an open-ended fashion.
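The difference between learning with and without a human supervisor, together with the open-ended goal of WP5.2.3, can be illustrated with a nearest-class-mean learner. This is a hypothetical sketch, not the project's algorithm: appearance is assumed to be a fixed-length feature vector, and `OpenEndedLearner`, its methods and the distance threshold are invented for the example. A supervisor's label always creates or updates a category, while an unlabelled sample either joins the nearest category or starts a new, unnamed one that a supervisor can name later.

```python
import math


class OpenEndedLearner:
    """Hypothetical nearest-class-mean learner that can add categories at any time."""

    def __init__(self, new_category_threshold=1.0):
        self.threshold = new_category_threshold  # assumed distance limit
        self.means = {}    # category name -> mean appearance vector
        self.counts = {}   # category name -> number of samples seen
        self._unnamed = 0  # counter for autonomously created categories

    def nearest(self, x):
        """Return (category, distance) of the closest category mean."""
        if not self.means:
            return None, math.inf
        best = min(self.means, key=lambda c: math.dist(x, self.means[c]))
        return best, math.dist(x, self.means[best])

    def learn(self, x, label=None):
        """Learn from one sample; label is None when no supervisor is present."""
        if label is None:  # autonomous learning
            label, d = self.nearest(x)
            if label is None or d > self.threshold:
                self._unnamed += 1
                label = f"object-{self._unnamed}"
        if label not in self.means:  # open-ended: add a new category
            self.means[label] = list(x)
            self.counts[label] = 0
        n = self.counts[label] + 1
        # Incremental mean update: m <- m + (x - m) / n
        self.means[label] = [m + (xi - m) / n for m, xi in zip(self.means[label], x)]
        self.counts[label] = n
        return label

    def name_category(self, old, new):
        """Let a supervisor name a category that was created autonomously."""
        if new in self.means:
            raise ValueError(f"category {new!r} already exists")
        self.means[new] = self.means.pop(old)
        self.counts[new] = self.counts.pop(old)


learner = OpenEndedLearner(new_category_threshold=1.0)
learner.learn([0.0, 0.0], label="cup")   # supervised: new category "cup"
learner.learn([0.2, 0.1])                # autonomous: close to "cup"
learner.learn([5.0, 5.0])                # autonomous: far away, "object-1"
learner.name_category("object-1", "bottle")
print(learner.nearest([4.8, 5.1])[0])    # bottle
```

Storing only a running mean per category keeps memory constant per category, which suits open-ended learning; the price is that a single threshold decides when something counts as new.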
Within WP5.2.3, the focus is on binding the appearance layer to the physical interaction layer, so as to link the object model to the corresponding actions of the companion.

Figure: Learning sensory-motor representations through robot-object interaction.

Main achievements:

2004 - D5.1.1: Report on methods for hierarchical probabilistic representations of space

RA5.1 presentation (by Ben Krose, UvA)

RA5.2 presentation (by Michel Devy, LAAS)

The movie shows how a robot can benefit from human feedback while building a map of the environment. A topological map is built by taking images in a real home environment and comparing them pairwise. Room labels provided by a human guide are used to augment this map. Because the map is computed online while driving through the house, the robot can ask the guide for feedback: it reports a room label when it thinks it is re-entering a room, and the guide can then correct this label, which the robot uses to update its map. (If the video does not play, try VLC.)

Below are listed only some of the RA5-related publications; please see the Publications page for more.
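The map-building loop shown in the video can be sketched as follows. The sketch is hypothetical: each image is assumed to be already reduced to a fixed-length appearance descriptor, pairwise comparison uses cosine similarity with an assumed `link_threshold`, and `AppearanceMap`, `add_image` and `correct_label` are invented names. When a new image closely resembles an already-labelled node, the robot reports that node's label (it believes it is re-entering that room), and the guide can correct it.

```python
import math


def cosine(a, b):
    """Cosine similarity between two descriptor vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class AppearanceMap:
    """Hypothetical appearance-based topological map with human room labels."""

    def __init__(self, link_threshold=0.9):
        self.link_threshold = link_threshold  # assumed similarity cut-off
        self.nodes = []     # one appearance descriptor per image/node
        self.labels = []    # room label per node, or None
        self.edges = set()  # undirected links (i, j) with i < j

    def add_image(self, descriptor, label=None):
        """Add an image online; return (node id, room label suggested by the robot)."""
        new_id = len(self.nodes)
        # Pairwise comparison against every image seen so far.
        similar = [i for i, d in enumerate(self.nodes)
                   if cosine(descriptor, d) >= self.link_threshold]
        if new_id > 0:
            self.edges.add((new_id - 1, new_id))  # consecutive images are neighbours
        for i in similar:
            self.edges.add((i, new_id))
        self.nodes.append(descriptor)
        # If the image resembles a labelled node, suggest that room's label.
        labelled = [i for i in similar if self.labels[i] is not None]
        suggestion = None
        if labelled:
            best = max(labelled, key=lambda i: cosine(descriptor, self.nodes[i]))
            suggestion = self.labels[best]
        self.labels.append(label if label is not None else suggestion)
        return new_id, suggestion

    def correct_label(self, node_id, label):
        """Apply the guide's correction to a node's room label."""
        self.labels[node_id] = label


amap = AppearanceMap(link_threshold=0.9)
amap.add_image([1.0, 0.0, 0.0], label="kitchen")  # guide names the room
amap.add_image([0.0, 1.0, 0.0], label="hall")
node, suggestion = amap.add_image([0.95, 0.1, 0.0])
print(node, suggestion)  # 2 kitchen
amap.correct_label(node, "dining room")  # guide corrects the robot's guess
```

Comparing each new image against all stored ones is quadratic over a whole tour; it keeps the sketch close to "comparing them pairwise", but a real system would typically restrict or index the comparisons.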


An Integrated Project funded by the European Commission's Sixth Framework Programme


Video-RA5: Integration of appearance-based mapping and human-robot interaction (exploreGraph.wmv)
