Research Activity 2: Detection and understanding of human activity

'...A robot companion that works and interacts with humans and operates among them should have a clear understanding of humans.... The robot must have capabilities to recognize and perceive the human and reason about their activity...'


A robot companion that works with, interacts with and operates among humans should have a clear understanding of the humans acting within its visual range. The robot must be able to perceive humans and reason about their activity. Understanding human activity forms the basis for intuitive and efficient interaction with the robot and for learning from human behaviour. The robot should also be able to identify and recognize its owner.

Research Activity 2 (RA2), Detection and Understanding of Human Activity, explores the modelling, observation and semantic interpretation of human activities in the vicinity of, and in interaction with, the robot companion. The focus is on non-verbal characteristics such as position, movement and pose. The research activity is divided into three work packages (WPs), each situated at a different level of abstraction in the detection of human activities. WP2.1 deals with low-level detection and real-time tracking of human body parts, with methods based on different robot sensors and specialized for different distances between human and robot. WP2.2 develops the integration and fusion of detected body parts into geometric, abstract models of human body configurations, so that a comprehensive perception of humans can be achieved. WP2.3 extracts semantic information from this perception to understand human activities on a symbolic level, which supports proactive decision making and context awareness in dialogues. Work conducted in RA2 is related to the three project scenarios, Key Experiment 1 (The Robot Home Tour), Key Experiment 2 (The Curious Robot) and Key Experiment 3 (Learning Skills and Tasks), and also to the other Research Activities.

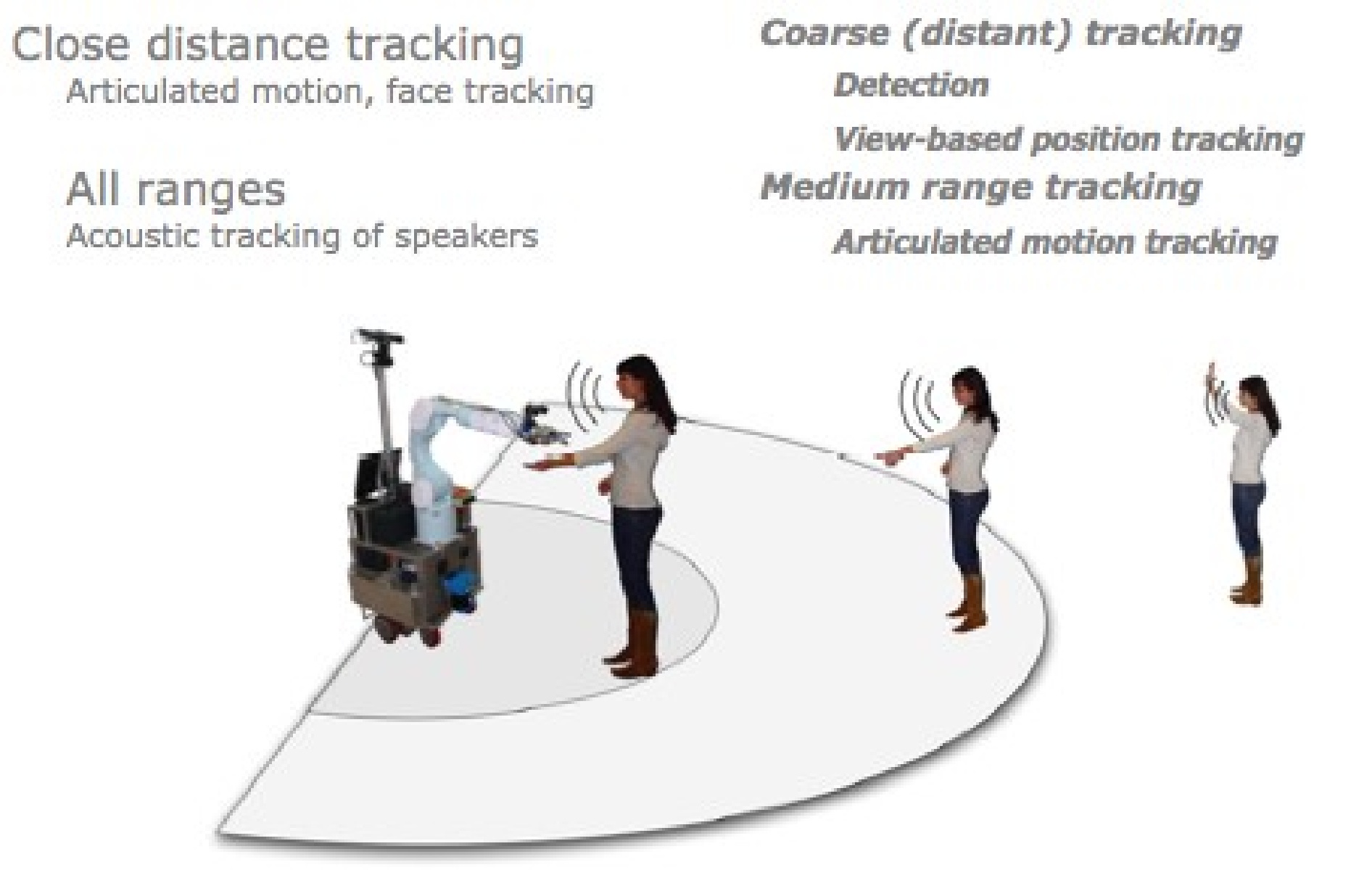

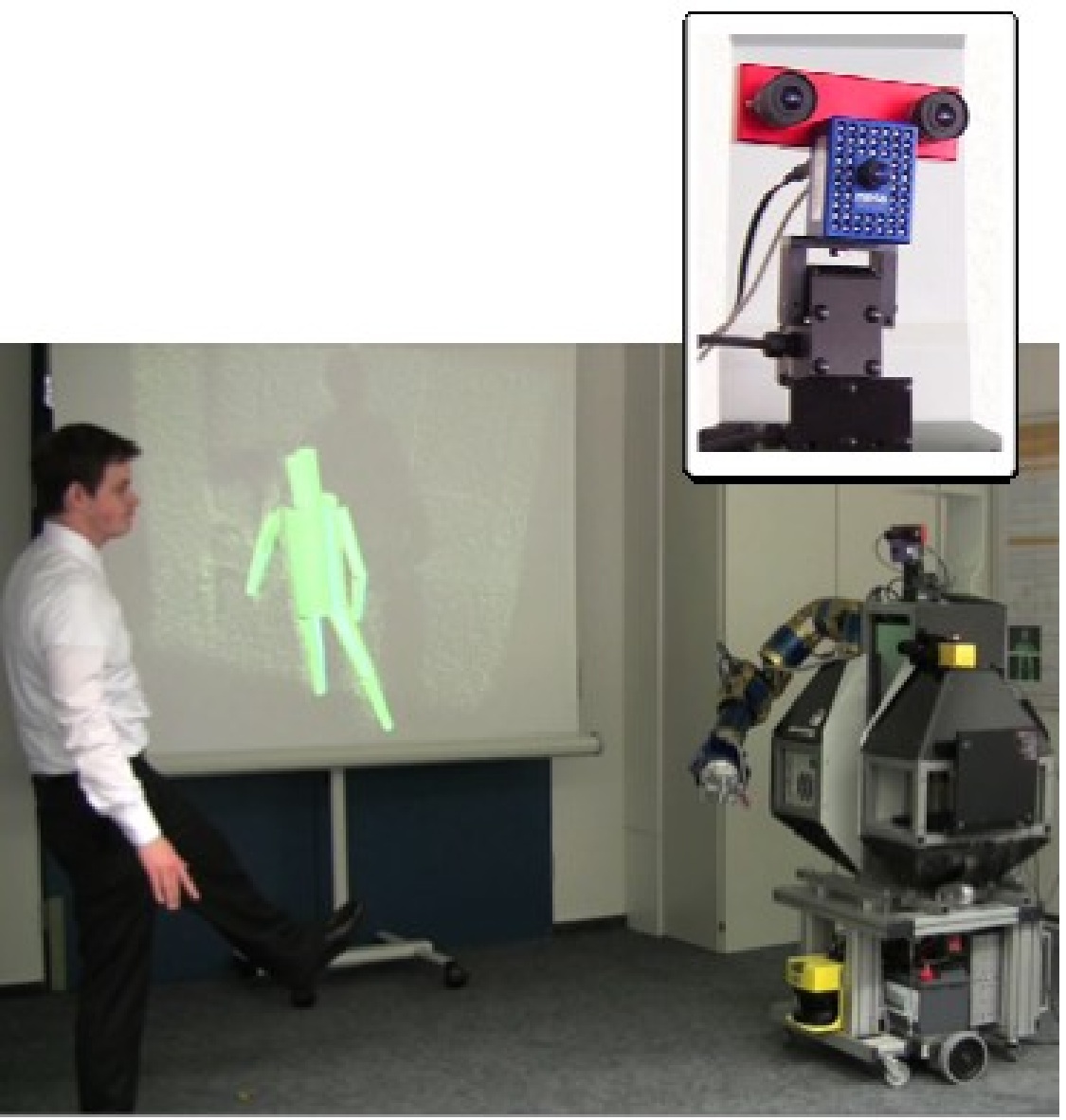

WP2.1 Detection and perception of body parts based on sensor features

This work package deals with the development of tracking methods for different distances between human and robot, which also implies a different focus of the tracking at each distance.

For humans in the far field, it is sufficient to track their location and motion as a whole. To achieve this goal, methods have been developed for different sensors to obtain maximum flexibility and robustness: a part-based people detection algorithm that can handle partial occlusions has been developed for omnicam images, and a particle filtering approach based on colour images has been developed, refined and evaluated.

Perception modalities

A mid-range, skeleton-based human motion tracking approach has been developed. It relies on depth images acquired with a time-of-flight camera, and on laser scanner data for initialisation. The initialisation step uses laser-scanner leg detection to estimate the presence and position of a potential human before the skeleton-based tracking starts. After leg detection, a search for the person's head in the depth images verifies that a human is indeed present. Finally, the skeleton-based motion tracking can use the head and leg positions as initialisation points. Articulated human body motion can thus be tracked in real time. This is especially important for the human-aware motion planning under development in RA3, which could enable the robot to plan its own manipulations with full knowledge of the body pose of an interacting human.

For humans at close distance, the robot needs accurate information about the body pose to plan its own motions in a safe and friendly manner.
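The particle filtering idea mentioned above for far-field tracking can be illustrated with a minimal bootstrap particle filter. This is only a sketch under simplifying assumptions: it tracks a 2D position from noisy position measurements instead of colour image cues, and the function names, noise parameters and the simulated trajectory are hypothetical rather than taken from the project software.

```python
import math
import random


def particle_filter_step(particles, measurement, motion_std, meas_std, rng):
    """One predict / weight / resample cycle of a bootstrap particle filter.

    particles   -- list of (x, y) position hypotheses
    measurement -- observed (x, y) position (stand-in for an image-based cue)
    """
    # Predict: diffuse every hypothesis with a random-walk motion model.
    predicted = [
        (x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
        for x, y in particles
    ]
    # Weight: Gaussian likelihood of the measurement given each hypothesis.
    weights = [
        math.exp(-((px - measurement[0]) ** 2 + (py - measurement[1]) ** 2)
                 / (2.0 * meas_std ** 2))
        for px, py in predicted
    ]
    if sum(weights) == 0.0:
        # All hypotheses are implausible: fall back to uniform weights.
        weights = [1.0] * len(predicted)
    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def estimate(particles):
    """Mean of the particle set, used as the position estimate."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)


def track_demo(seed=0, steps=20):
    """Track a simulated person walking across a 10 m x 10 m area."""
    rng = random.Random(seed)
    particles = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(500)]
    true_pos = (2.0, 3.0)
    for _ in range(steps):
        true_pos = (true_pos[0] + 0.2, true_pos[1] + 0.1)
        noisy = (true_pos[0] + rng.gauss(0, 0.3), true_pos[1] + rng.gauss(0, 0.3))
        particles = particle_filter_step(particles, noisy, 0.3, 0.3, rng)
    return true_pos, estimate(particles)
```

Calling `track_demo()` returns the final simulated position together with the filter estimate, which should stay close to each other as long as the motion noise covers the person's step size.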
For close-range face detection, the approach using Principal Component Analysis (PCA) has been further tuned and evaluated, showing superior performance compared to conventional approaches. Finally, the acoustic localization system has been brought to the state of a working prototype, and the corresponding localization strategies have been developed further. Early experimental assessment of the whole system has shown promising results.

WP2.2 Human body model: integration and fusion

WP2.2 is concerned with the development of models and fusion methods for tracking humans and their body pose. This is important because a robot should never rely on a single modality for tracking humans: its sensors have different strengths and weaknesses, so combining these modalities leads to more accurate and more robust tracking output. The goal is to integrate tracking information from all available sensor sources (see WP2.1) and fuse it within one framework. WP2.2 forms the link between the dedicated tracking methods in WP2.1 and the interpretation of human movements, which takes place in WP2.3.

During the first phase of the project, a geometrical model of the human body was set up. This model consists of a hierarchical composition of generalized cylinders and is both extendible and parameterizable: it can be extended with, for example, a hand model or a more accurate head or leg/foot model without affecting the existing model and algorithms. A common human model has been defined, and all algorithms are supposed to work with the same model and raw data. This enables evaluation and comparison on the one hand, and facilitates the integration and fusion of all algorithms on the other.

Two competing fusion approaches have been developed: a multi-hypothesis and a single-hypothesis fusion framework. They are used and evaluated for the fusion of the different kinds of available tracking information.
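The hierarchical, extendible body model described above can be pictured as a tree of parts. The following sketch is an assumption-laden illustration, not the project's data structure: each generalized cylinder is simplified to a length and two end radii, and the class name `BodyPart`, the part names and all dimensions are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class BodyPart:
    """One node of the hierarchy, approximated by a tapered cylinder."""
    name: str
    length: float        # metres, along the limb axis
    radius_start: float  # radius at the end nearer to the torso
    radius_end: float    # radius at the far end
    children: List["BodyPart"] = field(default_factory=list)

    def attach(self, child: "BodyPart") -> "BodyPart":
        """Add a child part and return it, so chains can be built step by step."""
        self.children.append(child)
        return child

    def walk(self) -> Iterator["BodyPart"]:
        """Depth-first traversal of this part and all its descendants."""
        yield self
        for child in self.children:
            yield from child.walk()

    def find(self, name: str) -> Optional["BodyPart"]:
        """Return the part with the given name, or None if it is not in the tree."""
        for part in self.walk():
            if part.name == name:
                return part
        return None


def build_basic_model() -> BodyPart:
    """Torso, head, two arms and two legs (illustrative dimensions only)."""
    torso = BodyPart("torso", 0.55, 0.17, 0.15)
    torso.attach(BodyPart("head", 0.25, 0.09, 0.09))
    for side in ("left", "right"):
        upper_arm = torso.attach(BodyPart(f"{side}_upper_arm", 0.30, 0.05, 0.04))
        upper_arm.attach(BodyPart(f"{side}_forearm", 0.27, 0.04, 0.03))
        thigh = torso.attach(BodyPart(f"{side}_thigh", 0.45, 0.08, 0.06))
        thigh.attach(BodyPart(f"{side}_shank", 0.43, 0.06, 0.04))
    return torso


# Extending the model with a hand leaves all existing parts untouched.
model = build_basic_model()
model.find("right_forearm").attach(BodyPart("right_hand", 0.18, 0.03, 0.04))
```

Because a new part is only appended to the children of an existing node, algorithms that traverse the original parts keep working on the extended model.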
The multi-hypothesis fusion algorithm is based on a geometric body model and involves projection algorithms and particle filtering techniques. It has been extended to include stereoscopic data, which improved the fusion result, especially when parts of the sensory input channels are occluded. The likelihood function combines model contours, colour patches and a Mahalanobis distance measure in 3D. For further integration, a new functional "root" module has been developed which fuses information from the different modules dedicated to human detection and tracking: the interactively distributed Bayesian multi-object 3D stereo-based tracker dedicated to the extremities of the human upper body (hands and head), the detector based on a 2D laser range finder dedicated to human legs, and the motion capture system based on a SwissRanger device. This module centralizes all human body part localization and can both compare and merge the inferred 3D information.

Fusing object and body tracking

The single-hypothesis fusion framework is derived from the 3D tracking approach based on the Iterative Closest Point (ICP) algorithm developed in WP2.1. It involves modelling the different sensor input sources according to their reliability. With these models, which have been derived empirically from an exhaustive evaluation, the different tracking approaches can be fused: 3D point-cloud-based tracking, 2D image-based face and hand tracking, and 3D leg tracking with the laser scanner. The ICP-based tracking approach has then been extended to track variable kinematic chains. This is useful for manipulation or transport actions in which objects of medium or large size are carried by the human. Within this approach, an object model is attached to the human kinematic chain (usually at the hand), and the kinematic system consisting of human (arm) and object is tracked as a whole during the manipulation or transport.
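The idea of weighting sensor sources by their reliability, as in the single-hypothesis framework above, can be illustrated with inverse-variance weighting. This is a standard textbook technique used here as a stand-in; the text does not state which weighting scheme the project uses, and the function name `fuse_estimates` and all positions and variances below are hypothetical.

```python
from typing import List, Sequence, Tuple

# One estimate: (3D position in metres, variance in m^2 describing reliability)
Estimate = Tuple[Sequence[float], float]


def fuse_estimates(estimates: List[Estimate]) -> Tuple[Tuple[float, ...], float]:
    """Fuse position estimates of one body part by inverse-variance weighting.

    A source with a small variance (high reliability) pulls the fused
    position more strongly towards its own estimate.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    dims = len(estimates[0][0])
    fused = tuple(
        sum(w * pos[i] for (pos, _), w in zip(estimates, weights)) / total
        for i in range(dims)
    )
    # The fused variance is never larger than the best single source.
    return fused, 1.0 / total


# Hypothetical estimates of the right-hand position from two trackers.
hand_estimates = [
    ((0.40, 0.10, 1.00), 0.01),  # 3D point cloud based tracker
    ((0.44, 0.12, 1.04), 0.03),  # 2D image-based hand tracker, back-projected
]
fused_position, fused_variance = fuse_estimates(hand_estimates)
```

In this example the fused position lies three times closer to the point cloud estimate than to the image-based one, reflecting the assumed 3:1 ratio of their reliabilities.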
The fusion of 2D and 3D information for person tracking has been carried out within the ICP-based tracking framework. In previous project phases, skin colour features from colour images were used for fusion within the 3D tracking. During the last phase of the project, this has been extended to arbitrary image features on the human body that can be detected and tracked in the camera image. Importantly, the software is available to the community at the VooDoo web address (http://wwwiaim.ira.uka.de/users/knoop/VooDoo/doc/html/).

WP2.3 Context based interpretation and classification of activities

Building strongly on the results of WP2.2, the work in WP2.3 concentrates on the interpretation of human motion. Detecting and recognizing the activities in which an observed human is currently engaged is a crucial ability for a cognitive companion robot. It is especially necessary for interaction, enabling the robot to be proactive and to decide how, and even whether, to engage in interaction with its human partner. The methods developed within this WP interpret human motion based on the articulated body model provided by WP2.2 and estimate the current activity of the observed user.

The approach to human activity recognition based on articulated 3D body tracking works in three steps. The body model resulting from the tracking is used to calculate a set of so-called intrinsic features for the recognition, e.g. absolute and relative positions and velocities of body parts, angles between limbs, and the periodicity and major direction of motions. In addition, extrinsic features, such as information about recognized objects in the scene, can be added to the feature set.

A new approach for interactively integrating the user into the activity training process has been developed.
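Some of the intrinsic features listed above (relative positions, velocities and angles between limbs) can be computed directly from tracked 3D joint positions. The sketch below assumes joints are given as (x, y, z) tuples in metres; the function names and the example coordinates are hypothetical and do not reflect the project's actual feature definitions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Vec3) -> float:
    return math.sqrt(sum(c * c for c in v))


def relative_position(part: Vec3, reference: Vec3) -> Vec3:
    """Position of a body part expressed relative to another part."""
    return _sub(part, reference)


def speed(previous: Vec3, current: Vec3, dt: float) -> float:
    """Magnitude of the velocity of a body part between two frames."""
    return _norm(_sub(current, previous)) / dt


def limb_angle(joint: Vec3, end_a: Vec3, end_b: Vec3) -> float:
    """Angle in radians between the two limbs that meet at `joint`."""
    a = _sub(end_a, joint)
    b = _sub(end_b, joint)
    denom = _norm(a) * _norm(b)
    if denom == 0.0:
        raise ValueError("limb of zero length")
    cos_angle = sum(x * y for x, y in zip(a, b)) / denom
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_angle)))


# Hypothetical right arm with the forearm raised at a right angle.
shoulder, elbow, hand = (0.0, 0.0, 1.4), (0.3, 0.0, 1.4), (0.3, 0.0, 1.7)
elbow_angle = limb_angle(elbow, shoulder, hand)
hand_relative_to_shoulder = relative_position(hand, shoulder)
```

Features of this kind are cheap to evaluate per frame, so a large candidate set can be computed first and reduced afterwards by feature selection.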
As the training of a new activity is normally an intentional process, it is feasible to have the user guide the training process and validate its results using his or her background knowledge. To provide such interactive options, the complete feature set has been structured into a hierarchical taxonomy, which is based on the human body and the different types of features used for the activity recognition. A mapping has been established between the elements of the taxonomy and possible user input, so that the user can preselect the features to be processed in the subsequent automatic feature selection step. Without user intervention, the automatic feature selection works on the complete, unchanged feature set. The user can also intervene repeatedly by revising the results of the automatic feature selection and deciding to repeat this step on a refined feature set.

In collaboration with RA3, new training data for further evaluation of the activity recognition has been prepared: several non-experts were asked to perform a table-laying task, and their performance was recorded.

Following the general goal of including more contextual knowledge in the recognition of actions from a robot's perspective, the analysis of hand trajectories has been extended towards an object-centred analysis. Instead of solely discerning tracking from pointing by incorporating the visual context in the vicinity of the tracked manipulating hands, the focus was on a more general scheme that recognizes sequences of action primitives using a probabilistic framework based on hidden Markov models (HMMs), which also accounts for varying viewpoints. Building on previous work on tracking the human hand and manipulated objects, a framework has been developed to compute object- and hand-related features and, in the process, discern different action primitives.
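Recognizing a sequence of action primitives with a hidden Markov model can be illustrated by Viterbi decoding over discrete observations. The sketch below is a toy example: the primitives (`approach`, `grasp`, `move`), the observation symbols and all probabilities are invented for illustration, and the handling of varying viewpoints mentioned above is not modelled.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely hidden state sequence for a discrete HMM (log-space)."""
    log = math.log
    scores = {s: log(start_p[s]) + log(emit_p[s][observations[0]]) for s in states}
    backpointers = []
    for obs in observations[1:]:
        new_scores, pointers = {}, {}
        for s in states:
            # Best predecessor for state s at this time step.
            best_prev = max(states, key=lambda p: scores[p] + log(trans_p[p][s]))
            new_scores[s] = (scores[best_prev] + log(trans_p[best_prev][s])
                             + log(emit_p[s][obs]))
            pointers[s] = best_prev
        scores = new_scores
        backpointers.append(pointers)
    # Backtrack from the best final state.
    path = [max(states, key=lambda s: scores[s])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path


# Hypothetical model: three action primitives, three hand/object observations.
STATES = ("approach", "grasp", "move")
START = {"approach": 0.8, "grasp": 0.1, "move": 0.1}
TRANS = {
    "approach": {"approach": 0.6, "grasp": 0.3, "move": 0.1},
    "grasp": {"approach": 0.1, "grasp": 0.5, "move": 0.4},
    "move": {"approach": 0.2, "grasp": 0.1, "move": 0.7},
}
EMIT = {
    "approach": {"hand_far": 0.8, "hand_near_object": 0.15, "object_moving": 0.05},
    "grasp": {"hand_far": 0.1, "hand_near_object": 0.8, "object_moving": 0.1},
    "move": {"hand_far": 0.05, "hand_near_object": 0.15, "object_moving": 0.8},
}

observed = ["hand_far", "hand_far", "hand_near_object",
            "object_moving", "object_moving"]
primitives = viterbi(observed, STATES, START, TRANS, EMIT)
# primitives == ["approach", "approach", "grasp", "move", "move"]
```

All probabilities in the toy model are non-zero so that the logarithms are defined; a model learned from data would typically need smoothing to guarantee this.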
Integrating multi-sensor 3D body tracking and activity interpretation (usable with any 3D time-of-flight sensor + optimal colour camera setup)

The relationship between speech, gestures and the demonstration of home activities in an HRI scenario has been explored in two user studies. The first experiment studied how people's natural production of gestures when explaining a home task changes in relation to the robot's feedback about its understanding of the human teacher's explanations. The second study investigated whether changing the way the demonstration task (laying the table) was to be performed would affect the gesture recognition rates of the system being developed.

2004 - D2.1.1 - Report on human tracking and identification

RA2 presentation (by Rüdiger Dillmann)

RA2: IPA human detection module

RA2: UKA human activity recognition module with Martin

Only some of the RA-related publications are listed below; please see the Publications page for more.


An Integrated Project funded by the European Commission's Sixth Framework Programme