Research Activity 7: Systems-level Integration & Evaluation

'...Key Experiments are derived from the research activities, and serve as integration platforms for their results. Each Key experiment focuses on one or more fundamental abilities of a cognitive robot...'



The COGNIRON project is organised into six Research Activities. To demonstrate their outcomes and results in an integrated fashion, a seventh Research Activity (Research Activity 7) has been introduced. Research Activity 7 is a specific project activity: it concentrates on integrating several robot functions and on experimenting with and evaluating them in the context of well-defined scenarios, or Key Experiments. These scenarios make it possible to exhibit the robots' cognitive capacities and to assess progress. The three Key Experiments are:

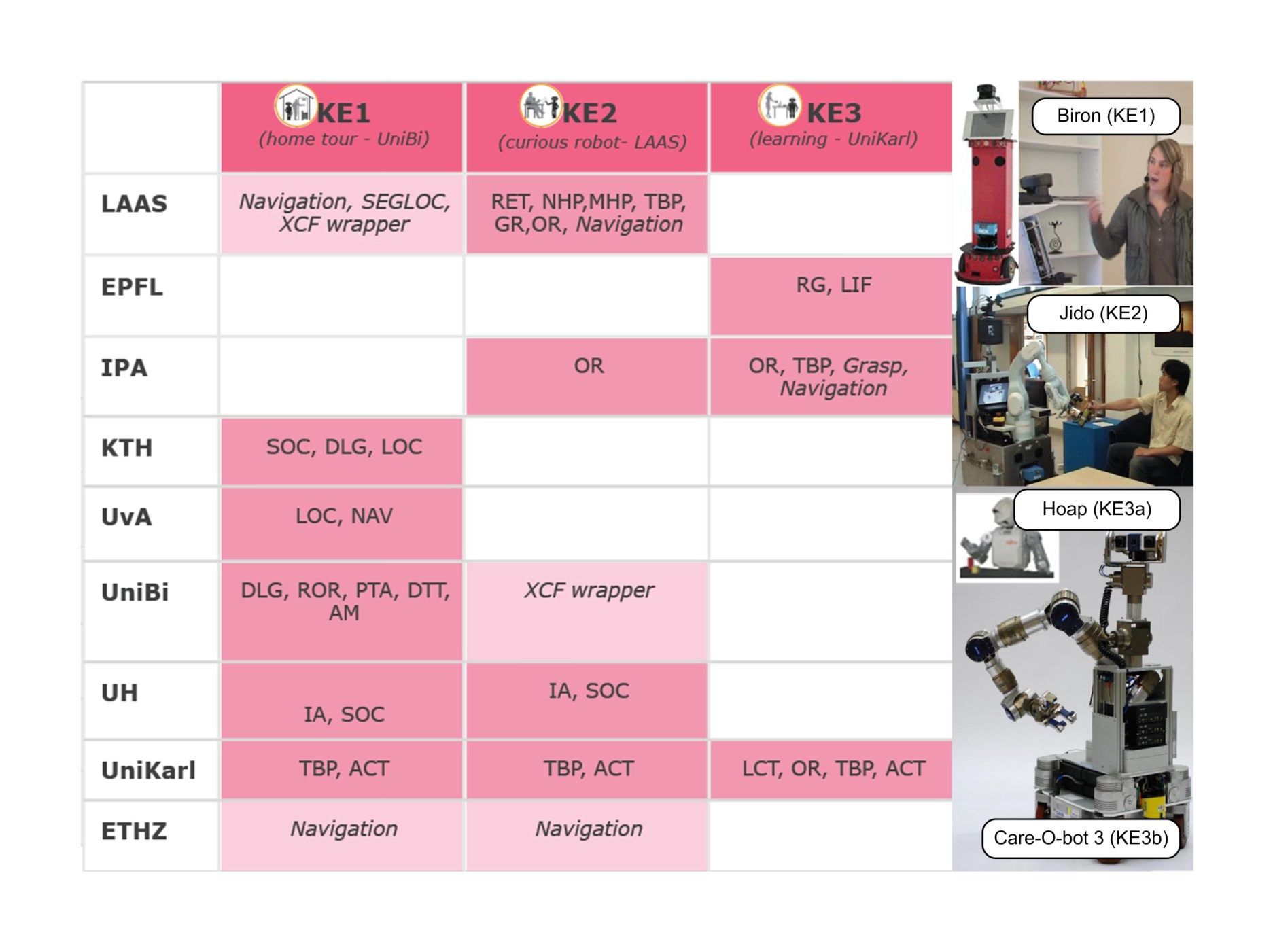

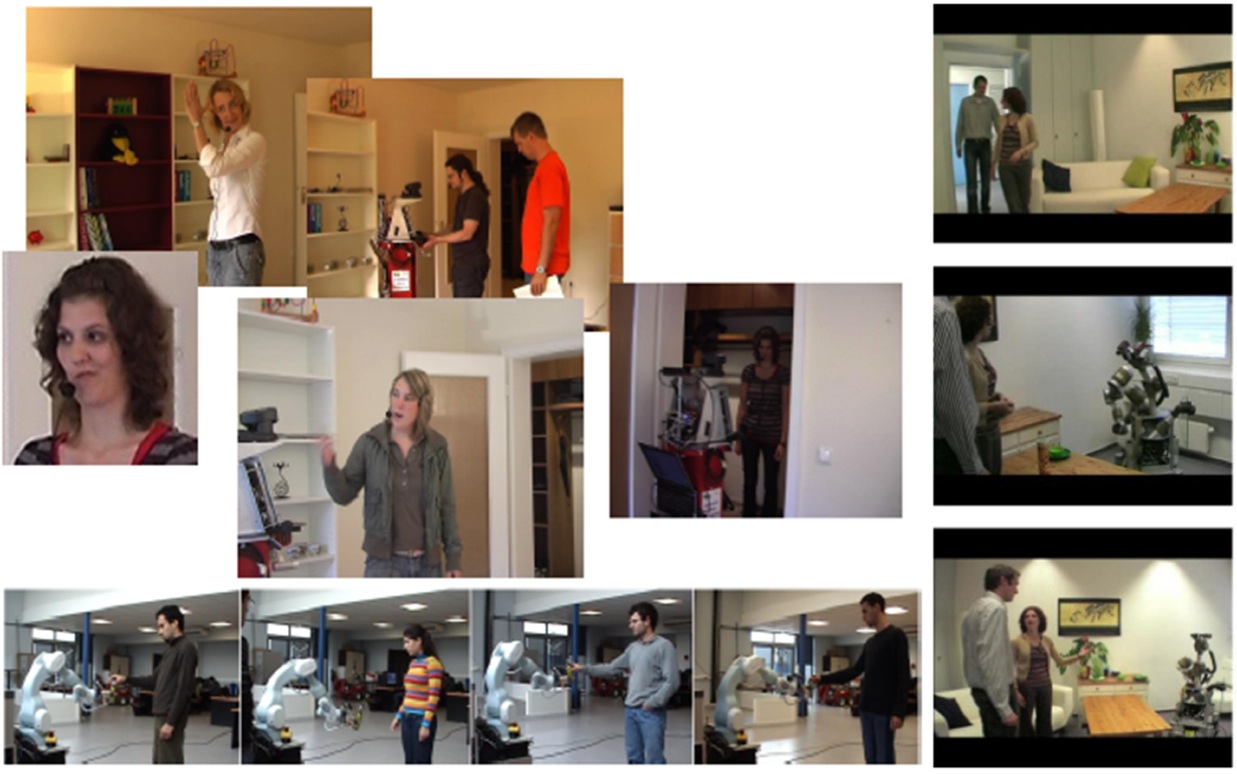

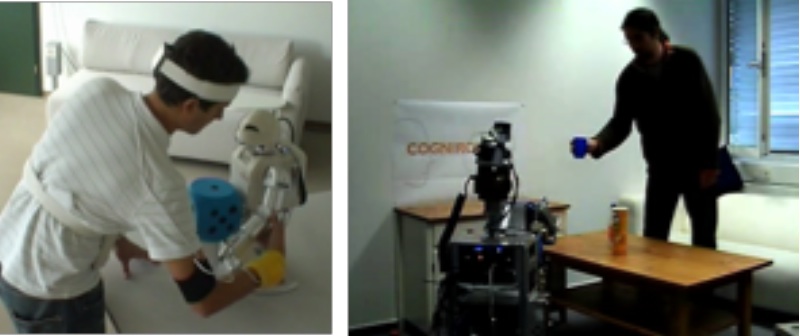

In this Research Activity, the three key experiments (KEs) were implemented and tested. The key experiment settings involve four robots that realize scenarios. These scenarios are instantiated and detailed in so-called scripts. A script describes a sequence of possible robot interactions with the environment (including humans). Within the KEs, the COGNIRON functions (CFs) were studied in the context of the project's Research Activities (RAs). Each COGNIRON function is implemented as one or several software services, which are smaller sub-components. The robots accomplish their missions using a set of these services. This set includes supporting services that are in some cases not a direct outcome of any RA but are needed to realize the scenario. A large effort in WP7 was devoted to developing and implementing these components and to integrating the COGNIRON functions.

Figure: The key experiment robots and partner interactions based on the COGNIRON functions, services and supporting services.

Please refer to the RA7 deliverable 2007 for descriptions of the function abbreviations.

WP7.1 - Evaluation of functions and Key Experiments

The objectives of this work package were to evaluate the key experiments and to report on the results in a joint document. The evaluation criteria covered both technical aspects and the user's perspective. The four robots of the three key experiments were equipped with the functionalities necessary to run the scripts of the individual KEs. All scripts were recorded as videos, with the real robots acting according to a storyboard designed specifically for evaluation from a user perspective (see also the RA3 documents). In some cases the robots were remotely controlled, because the final development of critical functions was still in progress. Functions that were ready and testable on a technical level were evaluated using data sets, small selected script runs and further conceptual estimates of scalability and stability.
Some impressions of the evaluation activities of all three key experiments are shown in the figure below. All evaluation results were collected and consolidated into the final RA7 deliverable (D7.2007).

Figure: Some impressions of the evaluation activities in WP7

WP7.2 - Key Experiment 1: The Robot Home Tour

Objectives

The key experiment KE1 "Robot Home Tour" focuses on multi-modal human-robot interaction. Through this interaction the robot learns the topology of the environments (apartments) and their artefacts, the identity and location of objects, and their spatio-temporal relations. As part of RA7, KE1 provides a scenario for studying dedicated research questions (with a focus on RA 1, 2, 5 and 6) and serves as a test-bed for evaluating the developed approaches. The robot platform in KE1 is BIRON. In the last project phase, KE1 placed special emphasis on evaluation, combining live user trials and video studies. The major objectives can therefore be summarized as follows:

(i) to implement an evaluation-ready system and actually evaluate it;

(ii) to ensure that the developed approaches and generated data remain in use beyond the end of the COGNIRON project by supporting dissemination and training activities.

Scenario

A human teaches the robot and shows it around a home-like environment. The robot discovers this environment and builds up an understanding of its surroundings. It engages in a dialogue with the human to name locations and to disambiguate information. The 'Home Tour' key experiment demonstrates the dialogue capacities of the robot, the implementation of human-robot interaction skills, and the continuous learning of both spaces and objects.

Figure: BIRON in the robot house

State of the setting

KE1 has been implemented following a release strategy with three release threads: Evaluation, Training, and Final. All of these threads have been finalized, and evaluations of the fully integrated system have been carried out.
A video study focused on the character of the robot, comparing extrovert and introvert robot behaviour in the home tour setting. Three iterations of user trials have been conducted, with subjects increasingly unfamiliar with robots. These trials were compiled into a corpus of interactions with an autonomously operating robot.

Cogniron functions shown in KE

The Cogniron functions demonstrated in KE1 are:

WP7.3 - Key Experiment 2: The Curious Robot

Objectives

The objectives of this work package are the final and full implementation and demonstration of Key Experiment 2, with two main underlying scientific issues:

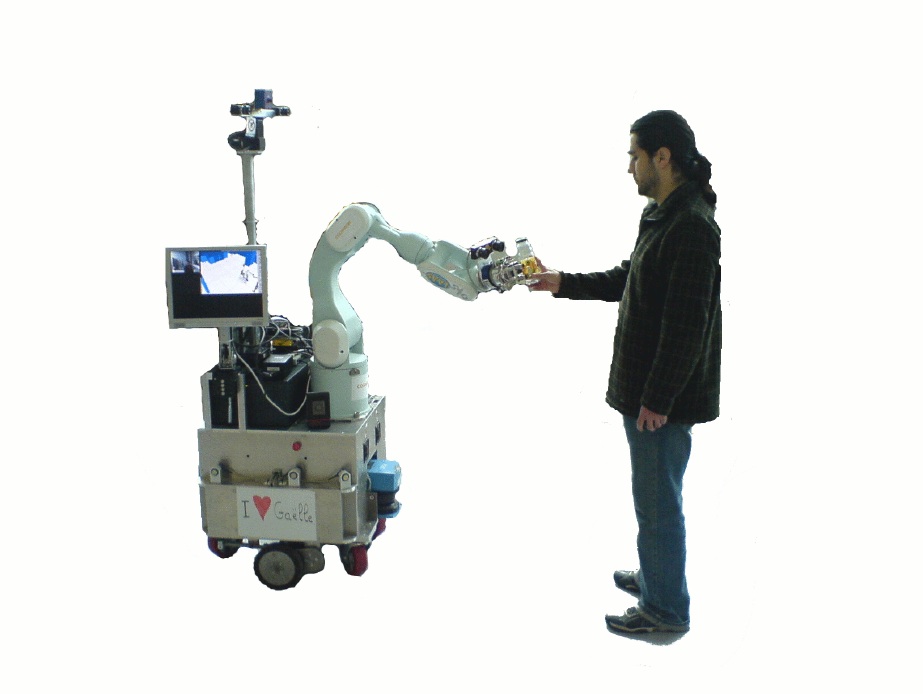

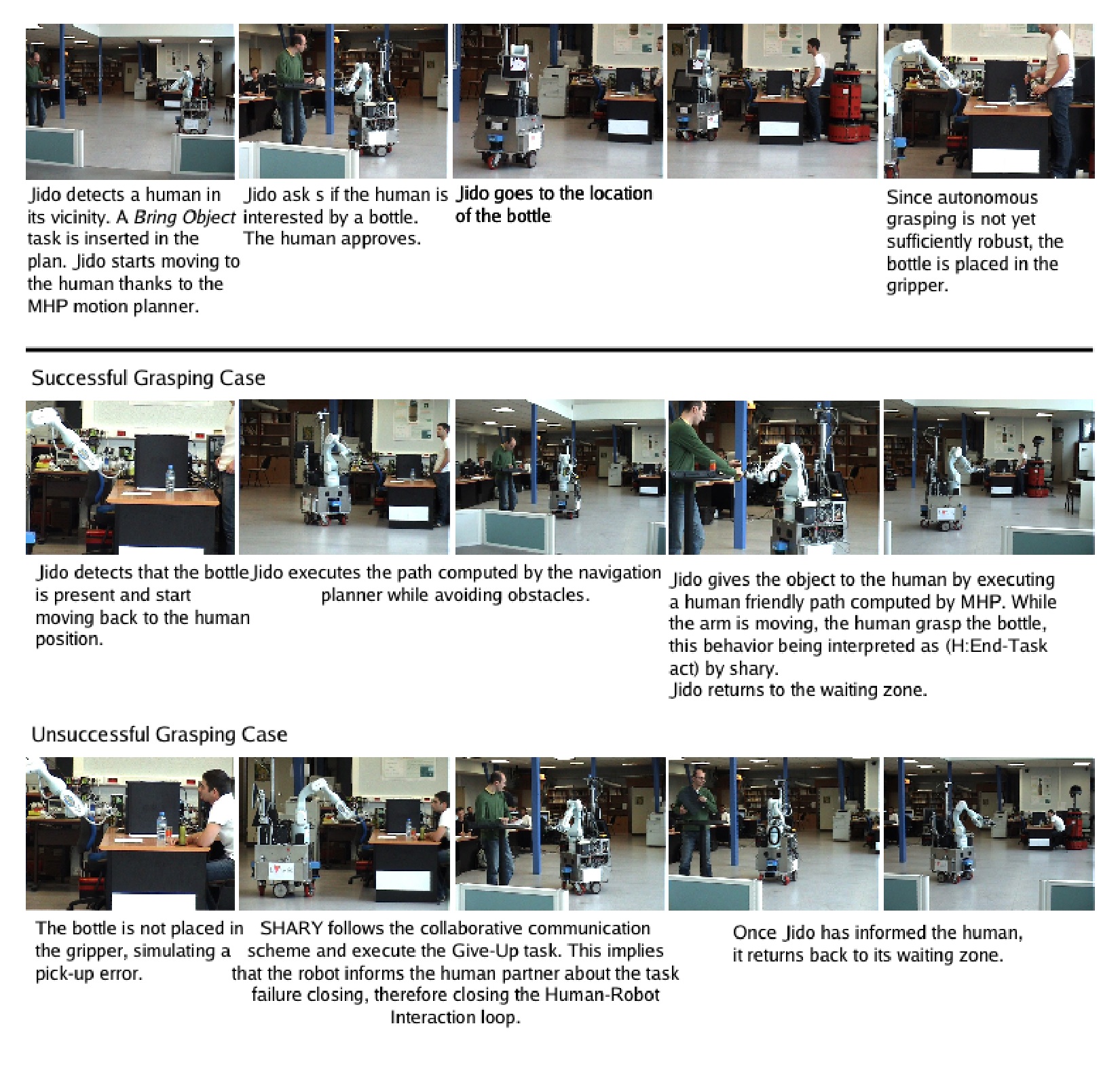

Scenario

A robot in an indoor environment has identified a person in the room. It observes the person and interprets his or her activity. It identifies potential needs of the person, for instance a drink. It then interrupts its own task to respond to the person's needs by fetching the object. The robot thus anticipates a situation that may occur and acts to facilitate the person's future action. The 'Curious Robot' key experiment allows the demonstration and assessment of initiative taking and knowledge acquisition, as well as intentionality, object recognition and close interaction with humans.

Figure: The robot Jido handing out a juice bottle to Luis

State of the setting

The challenge in KE2, as part of RA7, is the robot's capacity to achieve a task through explicit reasoning. This reasoning concerns its own abilities and the presence, state, needs, preferences and abilities of the person with whom it interacts. During the last project phase, we enhanced the robot's equipment and extended its functional and decisional abilities. We also established an incremental development and illustration process. In addition, we developed a set of debugging and visualization tools that expose the data structures and the knowledge explicitly manipulated by the robot.

Figure: An example of a fetch-and-carry task in interaction with a person

The main contributions come from RA 2, 3 and 6, with further contributions from RA 1 and 5. For a complete description, see Deliverable D7.2007.

Cogniron functions shown in KE

The robot has been endowed with a set of Cogniron functions and a set of complementary services. The integrated Cogniron functions are:

A complete robot software architecture has been implemented. It is used in several situations involving fetch-and-carry tasks with humans present in an apartment-like environment. The robot is capable of interacting with persons and handing objects to them in various situations. Efforts have been devoted to enabling the robot to perform its tasks in a legible and acceptable manner.

WP7.4 - Key Experiment 3: Learning skills and tasks

Objectives

The objectives of this work package are the final and full implementation and demonstration of Key Experiment 3: Learning Skills and Tasks. The script of this experiment had to be updated, and the Cogniron functions needed more precise definitions to realize it. The key experiment is split into two different scripts and will be presented on two different robots. The consortium made this decision for two reasons. First, two different learning methods are subject to research. Second, the robot used for the second script is still in development and therefore not yet available. The new robot (Care-O-bot 3) has considerable CPU capacity and will have a state-of-the-art sensor and actuator setup. The first script is concerned with Learning Skills: "Arranging and interacting with objects," while the second script is concerned with Learning Tasks: "Serving a guest." The first script investigates learning through imitation, while the second focuses on learning at the level of elementary operators.

Scenario

A person gives a robot a few demonstrations of a certain task. During a demonstration, the user may explain his or her actions with spoken comments. While watching the demonstrations, the robot learns the relevant parts of the task (e.g. geometrical relations between objects) and new skills such as object-action relations.
Once the demonstrations are finished, the robot tries to repeat the task on the basis of what it has learned. While doing so, it asks the demonstrator how best to complete the task. The human instructor may correct the robot's actions when asked or when needed. The instructor may also provide additional demonstrations of the same task to enhance the robot's knowledge of the task at hand. The 'Learning Skills and Tasks' key experiment allows the implementation and assessment of abilities related to learning skills, tasks and object models, to dialogue, and to understanding human gestures.

Figure: Learning Important Features of the Task

Figure: Object recognition and tracking

KE 3a Arranging and Interacting with Objects

The script KE 3a aims at evaluating the CFs CF-LIF and CF-RG (services imitateWhatTo, imitateHowTo and imitateMetric from RA 4). The software components are developed by EPFL and UH. The scenario includes a human demonstrator and a robot imitator. The demonstrator stands in front of the robot and demonstrates a simple manipulation task by displacing simple objects, such as wooden cubes or cylinders of different colours. The demonstrations can be performed in either of two ways: with motion sensors attached to the demonstrator's body, or by moving the robot's arms (kinaesthetic demonstration). Once a demonstration is finished, the robot tries to reproduce the task. After each reproduction, the demonstrator evaluates how well the robot fulfils the task's constraints and decides whether a new demonstration is required. The scenario finishes when the robot is able to generalize the skill correctly, i.e. when it reproduces the task correctly when faced with different initial configurations of the workspace.

KE 3b Learning to Serve a Guest

State of the setting

The new hardware of the Care-O-bot 3 used in KE3 was released. The two robots of KE3 were programmed to run the services that implement the Cogniron functions needed for episodes of the scripts. Final implementation, optimization and testing were carried out. The two robots were run at EPFL and IPA. The learning function CF-LCT was implemented and tested off-line in a different setting at UKA. This was decided because the recognition abilities did not provide information rich enough to display the capacity of the function. Invasive sensor equipment at UKA was used to test these parts.

Cogniron functions shown in KE

The Cogniron functions integrated on the robot for the first script of KE3 are:

The test results are given in deliverable D7.2007. They show that observational learning of, e.g., simple pick-and-place tasks is possible using the approach developed in Cogniron. The results also show how the choice of fitness function influences the learning result. To run the second script, the new Care-O-bot 3 was programmed to include the following functions:

- 2004 - D7.1.1 Specification of the 3 key experiments
- RA7 presentation by Jens Kubacki (IPA)
- KE1 presentation by Marc Hanheide (UniBi)
- KE2 presentation by Rachid Alami (LAAS-CNRS)
- KE3-A presentation by Aude Billard (EPFL)
- KE3-B presentation by Martin Haegele (IPA)

A collection of videos can be found there.


An Integrated Project funded by the European Commission's Sixth Framework Programme