Research Activity 3: Social Behaviour and Embodied Interaction

'...A robot engaging in social interactions with humans, such as a cognitive robot companion, needs to be aware of the presence of humans, of their willingness to interact, and acquire the appropriate way of approaching and maintaining spatial distances to them...'


Robots should acquire appropriate ways of approaching and maintaining spatial distances to people, in order to respect their personal and social spaces. Ultimately, this will lead to robots that are able to learn, through interaction, the personal spaces of people around them, adapting to individual preferences, as well as differences that can be found in particular user groups. In order to engage in social interactions, the Robot Companion needs to behave in a socially acceptable manner that is comfortable for human subjects while at the same time carrying out a useful task as identified in the Cogniron Key Experiments. Behaviour and interaction styles studied in this Research Activity include verbal as well as non-verbal behaviour. This research activity will in particular focus on the robot:

Work conducted in RA3 is related to Key Experiment 1 ('The Robot Home Tour') and Key Experiment 2 ('The Curious Robot'), and also to the other Research Activities.

WP3.1 Personal spaces and social rules in human-robot interaction

WP3.1 develops social rules for implementing socially acceptable, non-verbal behaviour in robots with respect to social distances in human-robot and robot-human approach scenarios, including handing over an object.
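
Social distances of this kind are often described in terms of Hall's proxemic zones (intimate, personal, social, public), which are also used in the WP3.2 distance analysis. As a minimal sketch only, assuming the commonly cited metric approximations of Hall's zone boundaries (about 0.45 m, 1.2 m and 3.6 m) rather than any thresholds measured in the Cogniron trials, a measured human-robot distance can be mapped to a zone. The `HallZone` enum and the `hall_zone` function are hypothetical names introduced for this illustration.

```python
from enum import Enum


class HallZone(Enum):
    """Hall's proxemic zones."""
    INTIMATE = "intimate"
    PERSONAL = "personal"
    SOCIAL = "social"
    PUBLIC = "public"


# Assumed upper bounds in metres: commonly cited approximations of Hall's
# zone boundaries, NOT thresholds measured in the Cogniron trials.
ZONE_LIMITS_M = (
    (0.45, HallZone.INTIMATE),
    (1.2, HallZone.PERSONAL),
    (3.6, HallZone.SOCIAL),
)


def hall_zone(distance_m: float) -> HallZone:
    """Map a human-robot distance (metres) to a Hall zone (hypothetical helper)."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for upper, zone in ZONE_LIMITS_M:
        if distance_m < upper:
            return zone
    # Anything at or beyond the social-zone bound falls in the public zone.
    return HallZone.PUBLIC
```

For example, `hall_zone(0.6)` returns `HallZone.PERSONAL`. A zone label only describes a distance; since acceptable approach distances depend on the context of the interaction, any social rule built on such labels would also need to be conditioned on the task.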

The findings from HRI experiments performed for WP3.1 have confirmed previous findings from HCI and for zoomorphic robots (cf. Bailenson et al. (2003) & Breazeal (2004)) that people respect interpersonal distances with robots. Our experimental findings indicate that people's preferences for robot approach distance are affected by both the context of the interaction and the situation (Koay et al. (2007b)). People will tolerate closer approaches for physical interactions (e.g. performing a joint task or handing over an object) than for purely verbal interactions (e.g. when giving commands) or where the robot is just passing by and not directly interacting with the human. In the 2005 HRI experiments for WP3.1 we found that the Human to Robot (HR) approach distances that people took when approaching a robot were highly correlated with their Robot to Human (RH) approach preferences for when the robot approached them. Participants' preferences regarding robot appearance and robot height were also explored (Walters (2008)). A possible finding is that the participants fall into two main groups: a majority who preferred a tall humanoid robot (D), and a minority who chose a small mechanoid robot (A). The first group may have chosen their preferred robot type because they perceived it as having more humanlike social qualities, whereas the latter group perhaps preferred a robot which they believed would be unobtrusive, both physically and in terms of requiring social attention from the user. The HR proxemic findings have been collected together to develop a predictive and interpretive framework for HR proxemic behaviour, which is proposed as a first stage for further investigations towards a more complete theory of HR proxemics (cf. Walters (2008)).

The findings from the long-term HRI trials with regard to participants' preference changes due to habituation are summarised here; more details can be found in Koay et al. (2007b). Overall, the participants tended to allow all robot types, with both mechanical and more humanoid appearances, to approach closer by the end of the five-week habituation period. Initially, participants tended to keep the robot with the humanoid appearance at a greater distance than the robot with the more mechanical appearance. However, by the end of the fifth week of habituation, there was significantly less difference in approach distance preferences across robot appearance types. A possible explanation is that people may continue to refine their perception of the humanoid robot, even after an extended period of contact. Initially, participants also tended to prefer the robots to approach indirectly from the front right or front left direction (relative to the participant), in agreement with findings from our previous HRI trials (D3.2005). However, by week five most of the long-term participants preferred whichever robot they interacted with to approach directly from the front. Not unexpectedly, participants who preferred closer approaches by the robot also tended to allow the robot hand to reach closer towards them while handing over a can. Participants who preferred greater approach distances preferred to reach out towards the robot hand themselves rather than allow the robot hand to reach toward them. Most participants (11 of 12) preferred the robot to hand them an object with its right hand, and this was not correlated with the handedness of the participants.

The robots which were to be used for the KEs were primarily prototype engineering platforms to demonstrate the technologies developed for the COGNIRON project. Mostly, they could not be safely deployed with inexperienced users.
From discussions with the partners from LAAS, EPFL and IPA in the first part of 2007, it became apparent that their robot platforms for KEs 2, 3a and 3b would not be available until close to the end of the project, too late to perform live HRI trials to carry out the UEE for these KEs. A Video-based HRI (VHRI) methodology had previously been developed to prototype, explore and pilot ideas, concepts and fertile areas for investigation before verifying them in more resource-intensive live HRI trials (cf. Woods et al. (2006a), Walters et al. (2007d), & Walters et al. (2007c)). It was therefore decided to use the VHRI methodology to perform the UEE. For KE2, the WP3.1 team members supervised, shot, edited and produced the UEE trial video (Figure 2). For the KE3 (a and b) VHRI trial videos, the UH WP3.1 team co-operated with the RA4 team members from UH, EPFL and IPA to develop and create the trial videos. The evaluation and data collection methods were also developed and piloted by the WP3.1 team. Two methods were chosen for collecting data: first, structured interviews, and second, questionnaires. The analysis of the interviews involved two experimenters viewing the video recording of each interview. Each experimenter took notes of the issues raised during the conversation, stopping the video whenever necessary to disambiguate meaning. The experimenters were allowed to discuss any issue during this phase. After viewing, the two experimenters compared notes and together wrote a report of the interview. The analysis of the questionnaires focused mainly on quantitative descriptions of the answers given by the participants.
Full details of the UEE methodology development, data collection, analyses and conclusions can be found in the technical report (Appendix to D7.2007), and the main findings in the RA7 deliverable report (D7.2007).

Some snapshots during and around the user studies.

The participants were asked to rate how they felt about some aspects of the robot movements and handing-over actions. Firstly, it seems that using interviews in combination with the KE2 video offered information that was both in-depth and wide-ranging. As a group, the participants offered insights not only into how they felt about the behaviour of the robots and humans in this particular video, but also used it as a starting point to explore how possible interactions with a similar system would affect their own everyday experience. Secondly, it seems that participants appreciated the robot's navigation and handing-over behaviour as being both safe and sensible. Judgements of naturalness and ease were not related to these measures, possibly reflecting participants' recognition that robots have different capabilities from humans when navigating and interacting with objects and individuals.

WP3.2 Posture and positioning

WP3.2 addresses the role of posture and positioning in task-oriented robot-human interaction. The work is based on user studies of how robot and human coordinate their actions in a shared space, including a multiple-room scenario, where spatial coordination is necessary for natural communication between them. The work is related to both CF-SOC, "Socially acceptable interaction with regard to spaces", and CF-LG, "Multimodal dialogue including robust speech understanding for natural communication". This workpackage is also closely linked with RA5, taking advantage of the Human-Augmented Mapping (HAM) system to enable user trials according to the targeted KE1, "The Home Tour".
The first and second periods of the Cogniron project involved two data collections on human-robot interaction using the Wizard-of-Oz methodology (Green et al. (2004); Green et al. (2006); Hüttenrauch et al. (2006a)), in which users were instructed to show the robot a single-room environment and teach it objects and places. The analysis of these trials informed the assessment of distances in human-robot interaction according to Hall's proxemics, and of spatial constellations according to Kendon's F-formations (Hüttenrauch et al. (2006)). This work was continued in the third period by in-depth studies of the interaction dynamics observed in the previously collected data, as it was found that static descriptions of spatial formations and distances (at certain points in time during the interaction) insufficiently explained the observed movement patterns of both the user and the robot. It was shown that an analysis of the spatiality of human-robot interaction benefits from coupling it to the evolving joint actions, in terms of interaction episodes and the multimodal and spatial transitions signalled between them (Hüttenrauch et al. (2006)). Another result from the third period, obtained in coordination with RA1, was the identification of a set of postures and movements in a communicative scenario. Based on observations of human-robot interaction, the notion of "spatial prompting" has been developed: an action of the robot incites the user to a particular spatial action or movement (Green & Hüttenrauch (2006)). In the final period of the Cogniron project, the human-robot interaction studies on posture and positioning were extended to include multiple rooms. Two different sets of studies were conducted. One study was carried out in Stockholm, Sweden, letting people try a MobileRobots PeopleBot in their own homes according to the "Home Tour" key experiment scenario.
A second set of multiple-room user trials, comprising two iterations, was conducted in Germany in the UB "robot flat" using the integrated KE1 demonstrator robot BIRON. Both sets of trials were intended to provide insights into the following issues:

In particular for the trial which took place in the subject's own home environment:

Another research approach originated from observations in a pilot trial for a multiple-room study (Topp et al. (2006)), in which subjects taught a robot rooms, regions, locations at which to perform actions, and different objects. These observations showed that subjects placed themselves in certain constellations and at certain distances relative to the robot, depending on what they were trying to show it. Expressed as a hypothesis, we included in the multiple-room investigation the research question:

Based upon early observations and cooperation with RA5 (Topp et al., 2006, RA5 report D5.2007), this hypothesis was operationalized as follows: rooms/regions are shown to the robot and given a label by placing the robot within the room/region; locations are characterized by the user and the robot standing in a constellation that opens a shared space between them (e.g., a so-called L-shape positioning). We also expected users to give a spatial reference and/or exhibit a deictic gesture when giving a label to a location, i.e. locations have a direction in which something can be done. Finally, objects were expected to be shown and named to the robot by picking them up and showing them to the robot directly with a deictic gesture, i.e. facing one another.

An observation from both multiple-room studies is that homes differ so fundamentally from laboratory settings that we advocate true immersion in the target environment when conducting studies on spatial relationships and spatial management in human-robot interaction aimed at the home. The homes and rooms where people live were found to be cramped with furniture, objects, and decorations of different sorts and materials. The most relevant aspects for a wheel-based robot system are, of course, the free space available for navigation, the floor material, detectable obstacles, children and pets on the floor, and any thresholds or edges in the flooring.
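
The operationalized hypothesis distinguishes constellations mainly by orientation: an L-shape that opens a shared space when labelling locations, and a vis-à-vis arrangement when showing objects. As a minimal sketch only, the code below labels a human-robot pose pair with one of Kendon's F-formation arrangements (vis-à-vis, L-shape, side-by-side) from planar positions and headings. The `Pose` type, the function names, the 30° tolerance and the ray-intersection test for a shared space are assumptions made for this illustration, not the annotation scheme used in the trials.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Planar pose: position in metres, heading in radians (CCW from +x)."""
    x: float
    y: float
    theta: float


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in [0, pi]."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def faces(p: Pose, target: Pose, tol: float) -> bool:
    """True if p's heading points at the target within tol radians."""
    bearing = math.atan2(target.y - p.y, target.x - p.x)
    return angle_diff(p.theta, bearing) <= tol


def cross(ax: float, ay: float, bx: float, by: float) -> float:
    """z-component of the 2D cross product (ax, ay) x (bx, by)."""
    return ax * by - ay * bx


def rays_meet_ahead(a: Pose, b: Pose) -> bool:
    """True if the two heading rays intersect in front of both agents,
    i.e. the headings point into a shared space between them."""
    dax, day = math.cos(a.theta), math.sin(a.theta)
    dbx, dby = math.cos(b.theta), math.sin(b.theta)
    denom = cross(dax, day, dbx, dby)
    if abs(denom) < 1e-9:  # parallel headings have no single meeting point
        return False
    wx, wy = b.x - a.x, b.y - a.y
    t_a = cross(wx, wy, dbx, dby) / denom  # distance along a's heading
    t_b = cross(wx, wy, dax, day) / denom  # distance along b's heading
    return t_a > 0 and t_b > 0


def classify_formation(human: Pose, robot: Pose, tol_deg: float = 30.0) -> str:
    """Label a human-robot pair as 'vis-a-vis', 'L-shape', 'side-by-side'
    or 'other' from relative heading (assumed tolerance tol_deg)."""
    tol = math.radians(tol_deg)
    rel = angle_diff(human.theta, robot.theta)
    if (abs(rel - math.pi) <= tol
            and faces(human, robot, tol) and faces(robot, human, tol)):
        return "vis-a-vis"  # facing one another
    if abs(rel - math.pi / 2) <= tol and rays_meet_ahead(human, robot):
        return "L-shape"  # perpendicular headings opening a shared space
    if rel <= tol:
        return "side-by-side"  # roughly parallel headings
    return "other"
```

For example, a user at the origin facing along +x and a robot 1.5 m ahead facing back towards the user is labelled `"vis-a-vis"`. Such a label only captures the instantaneous geometry; since static descriptions of formations were found not to explain the observed movement patterns, labels like these would in practice be tracked across interaction episodes.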
The homes visited in the Stockholm area were not adapted to the type of robot used in the trials, nor are PeopleBot robots specifically designed for use in homes. Consequently, we observed users who tried to collaborate with the robot system and make it work for them, for example by moving furniture out of the robot's way or by switching to direct navigation commands ("turn right/left", "forward", etc.). The home trials produced examples of such interactive assistance to the robot, although participants were not asked to provide it during the pre-trial introduction. We have identified underlying patterns of incidents and interaction styles, spatial management, positioning and multimodal communication across the different locations and the two trial set-ups (i.e. both in different participants' homes in Sweden and in the Bielefeld study). It can be observed that small objects that can be handheld seem preferably to be shown in a vis-à-vis position (Hüttenrauch et al., 2006b), at a distance that allows the object to be shown comfortably to the robot's camera.

Robot is shown object 'remote control'; note the vis-à-vis positioning. Inset shows the robot camera view of the interaction.

These are regular behaviours and are illustrated by examples of different participants and different items being shown and taught to the robot.

Showing the robot objects: the view from the robot's camera.

Another example of very similar gestures and positioning when showing the robot the same type of item is depicted in Figure 10, where both robot trial users teach the robot about the table by leaning on it with one hand. Regarding the question of how narrow passages such as doors are negotiated, we made some findings regarding joint passing behaviours:

Methodology Lessons Learned

During the design of the multiple-room studies, through their execution and upon reflecting on them, we gained many insights into how to conduct this type of user study with robots outside the laboratory. In this section some selected findings are described and discussed.

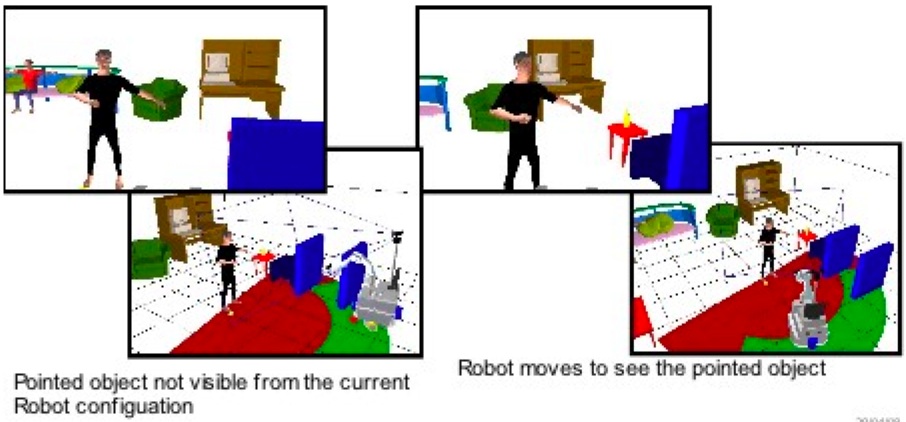

WP3.3 Models and algorithms for motion in the presence and vicinity of humans

The research conducted in this work-package followed the general goals defined in the previous deliverable reports (D3.2005-7) regarding the design and integration of a "human aware" navigation and manipulation planner relevant for COGNIRON's Curious Robot Key Experiment (KE2). Progress has been made mainly on further development of the algorithms of the Human Aware Manipulation Planner (HAMP), the Human Aware Navigation Planner (HANP) and the Perspective Placement Mechanism (PPM) (cf. Sisbot (2007), Sisbot et al. (2007a) & Sisbot et al. (2008)).

Human Aware Navigation Planner (HANP)

Robot navigation in the presence of humans raises new issues for motion planning and control, since human safety and comfort must be taken explicitly into account. We claim that a human-aware motion planner must not only elaborate safe robot paths, but also plan good, socially acceptable and legible paths. We have built a planner that explicitly takes the human partner into account by reasoning about his accessibility, his field of view and potential shared motions. This planner is part of a human-aware motion and manipulation planning and control system that we aim to develop in order to achieve motion and manipulation tasks in a collaborative way with the human.

Human Aware Manipulation Planner (HAMP)

The HAMP algorithm is based on user studies (cf. Koay et al. (2007)) and produces not only physically safe paths, but also comfortable, legible, mentally safe paths. Our approach separates the problem of manipulation for the KE2 scenario, which involves a robot giving an object to the human, into three stages:

Human Aware Manipulation Planner

These items are calculated by explicitly taking into account the human partner's safety and comfort. Not only the kinematic structure of the human, but also his field of view, his accessibility, his preferences and his state are reasoned about in the planning loop in order to achieve a safe and comfortable interaction. In each of the steps stated above, the planner ensures human safety by avoiding any possible collision between the robot and the human.

Perspective Placement Mechanism (PPM)

As in every robot motion planner, the robot has to plan its motion from its current actual position (or configuration), through a path, to a destination point (or configuration). The PerSpective Placement (PSP) mechanism is concerned with this last part of motion planning. We define PSP as a set of robot tasks which find a destination point for a robot according to certain constraints by performing perspective taking and mental rotation, in order to facilitate interaction and ease communication with the human partner.

Perspective Placement Mechanism

In order to interact with the human, the robot has to find a configuration in which it has direct contact with this human. This constraint helps to reduce the search space for a destination point. The search can be subdivided into two phases: first, the robot has to find positions that belong to the human's attentional field of view; second, it validates the positions obtained for visual contact, rejecting those where large visual obstructions would block the robot's perception of the scene.

WP3.4 Requirements for Contextual Interpretation of Body Postures and Human Activity

WP3.4 investigates gestures, body postures and movements that occur naturally in situations such as those described for the KEs.
This work uses annotated databases of image sequences of human activities and social behaviour, relevant to the KEs, in order to consolidate and expand the taxonomies initiated at UH and KTH in years 2 and 3.

Interaction principles

Approachability

Approachability, responsiveness and attentiveness are aspects of communication that have to do with liveliness and the ability to actively perceive others. To achieve approachability it is necessary to provide appropriate feedback regarding the quality of, and attitudes to, the perception and contact experienced during interaction (Green 2007). This is a pervasive aspect of human-human communication, but has to be made explicit for robots:

Adjustable Autonomy

Autonomy is an aspect of robotics that has implications for the quality of interaction and has been proposed as a way of managing initiative and responsibility in human-robot teamwork (Sierhuis et al., 2003). In Cogniron this has been investigated in RA1, where the behaviour of BIRON has been adapted to the quality of the ongoing communication (cf. D1.2007 and Li 2007). This can be formulated as a principle for how a companion should behave to appropriately handle the level of autonomy in an interactive situation:

Spatial regulation

Another aspect of human-robot interaction concerns its spatial aspects. The fact that a robot companion is physically present means that spatiality is important for the way the robot is understood and regarded by its users. The ability of the robot to regulate its movements, such as social distances, positioning and posture, is an important aspect of interactive behaviour. Spatial influence and the regulation of spatial behaviour have an impact on communicative behaviour, but the maxims of Grice (1975) cannot be applied to them. A set of maxims related to spatial regulation and predictability has been formulated, the latter being important so as not to startle users as the robot moves:

2004 - D3.1.1 Results from evaluation of user studies on interaction styles and personal spaces

RA3 presentation (by Mick Walters (UH))

Only some of the RA-related publications are listed below; please see the Publications page for more.


An Integrated Project funded by the European Commission's Sixth Framework Programme
